- Over the past several years, there have been tremendous advances in the field of quantum computation, but even greater amounts of hype surrounding those advances.

- A few years ago, quantum supremacy was achieved for the first time, where a quantum computer performed a calculation millions of times faster and more efficiently than a classical computer.

- But that’s a far cry from many of the technological advances being promised, including achieving quantum supremacy for a single practical problem. Here’s how to separate fact vs. fiction.

In our everyday experience, the world is 100% measurable, deterministic, and independent of the observer. The glass is either on the table in an unbroken state, or it’s on the floor in a shattered state, regardless of when or even whether you measure or observe it. The three marbles in your bag are definitively colored red, green, and blue, and no matter how you shake that bag or for how long, the red marble remains red, the green marble remains green, and the blue marble remains blue. And if you look at that quarter that somehow fell onto your nightstand long ago, it will always behave as though either “heads” or “tails” is facing up, never as though it’s part-heads and part-tails, simultaneously, at once.

But in the quantum Universe, this isn’t necessarily the case. A radioactive atom that remains unobserved will exist in a superposition of “decayed” and “undecayed” states until that critical measurement is made. The three valence quarks making up your proton may all have a definitive color anytime you measure them, but exactly what color you observe is guaranteed to not be constant over time. And if you shoot many electrons, one-at-a-time, through a double slit and don’t measure which slit it goes through, the pattern you see will indicate that each electron went through both slits simultaneously.

This difference, between classical and quantum systems, has resulted in both scientific and technological revolutions. One field that’s only now emerging is quantum computing, carrying the fascinating notion of quantum supremacy along with it, but also spawning a large series of dubious claims and misinformation. Here’s an explainer about quantum supremacy and the current state of quantum computers to help you separate fact from fiction.

Let’s start with an idea you’re probably familiar with: the notion of an everyday computer, also known as a classical computer. Although calculating machines and devices had been around for a long time, well prior to the 20th century, it was Alan Turing who gave us the modern idea of a classical computer in the form of what’s now known as a Turing machine.

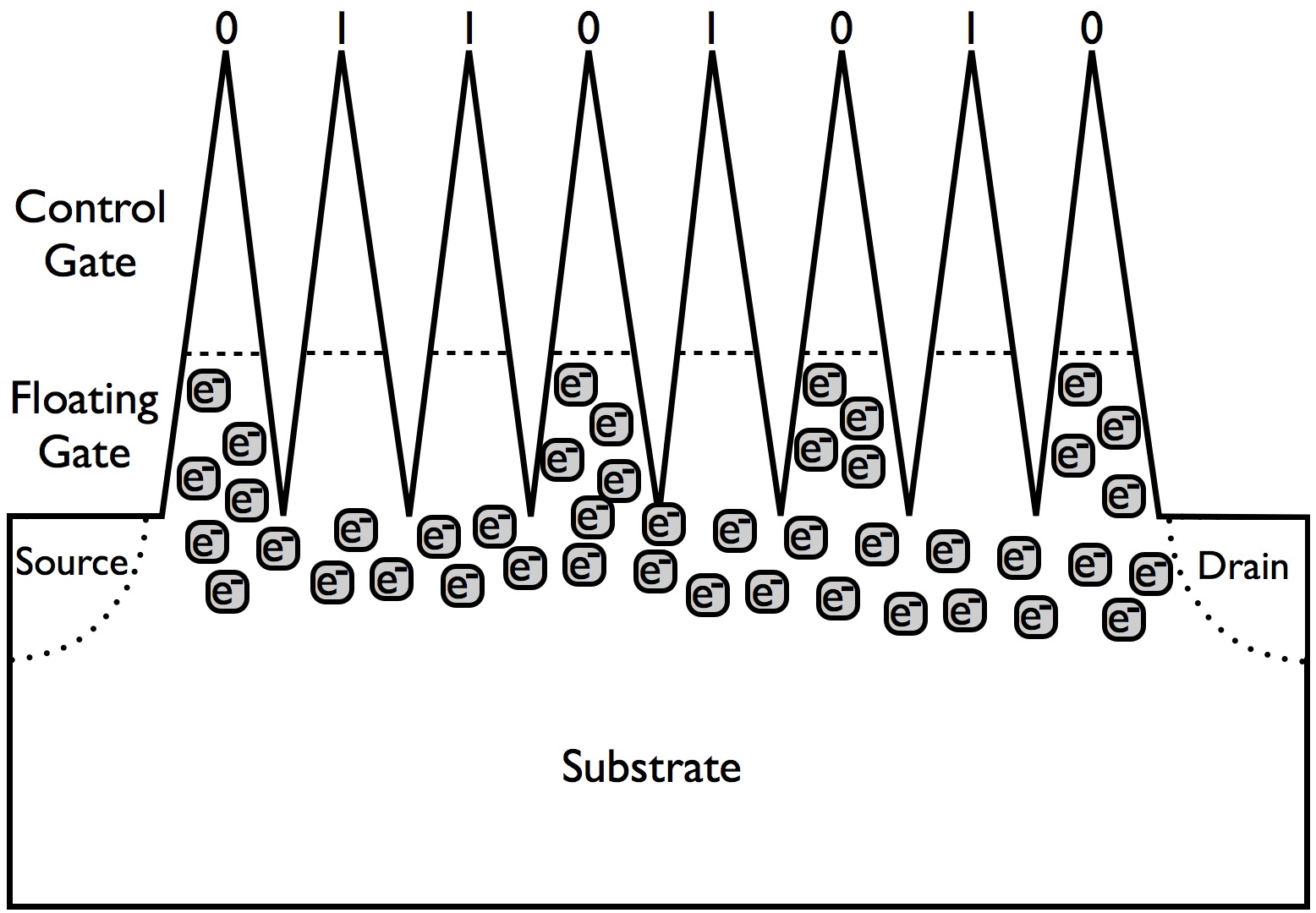

The simple version of a Turing machine is that you can encode any type of information you like into bits: or binary (with only two options) components that, for example, could be represented by 0s and 1s. You can then apply a series of successive operations to those bits (for example, operations such as “AND,” “OR,” “NOT,” and many more) in the proper order to perform any sort of arbitrary computation that you had in mind.

Some of those computations will be easy to encode and easy for the computer to perform: they require only a small number of bits, a small number of operations, and a very short time to compute them all. Others will be hard: difficult to code, computationally expensive for the computer to perform, and potentially requiring large numbers of bits, large numbers of operations, and long computing times. Regardless of your desired computation, however, if you can design an algorithm, or method, for successfully performing any computational task, you can program it into a classical computer. Eventually, given enough time, your computer will finish the program and deliver you the results.

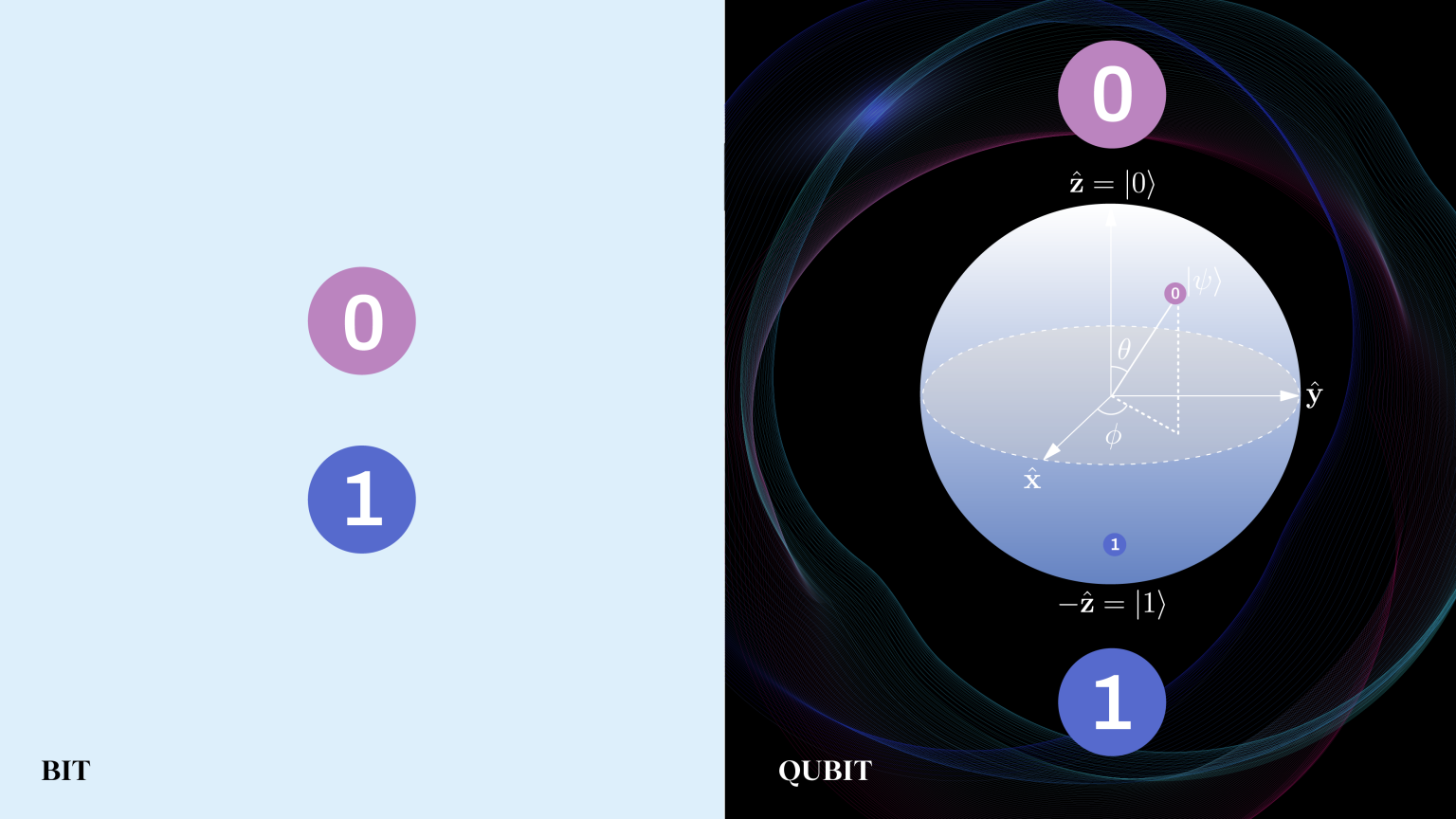

However, there’s a fundamental difference between this type of “classical computer” (that works exclusively with classical bits and classical operations) that we just described and a “quantum computer,” where the latter was purely a theoretical construct for many decades. Instead of regular bits, which are always in a state that’s known to be “0” or “1” with no exceptions, regardless of how or even whether you measure them or not, quantum computers use what are known as qubits, or the quantum analog of bits.

While qubits can take on the same values that classical bits can take on — “0” or “1” in this case — they can also do things like exist in an intermediate state that’s a superposition of “0” and “1” simultaneously. They can be part-way between fully 100% “0” and fully 100% “1” in any amount that sums to 100% in total, and the amount of “0” and the amount of “1” that a qubit possesses can change both as a result of operations performed on the qubit and also due to simple time-evolution.

But when you actually go to make that critical measurement of a qubit, and you ask it, “what quantum state it is actually in,” you’ll always see either a “0” or a “1” in your measuring device. You’ll never see that in-between value directly, even though you can infer that, based on the qubit’s effects on the overall outcome of the system, it must have simultaneously been a mix of “0” and “1” while the computation was taking place.

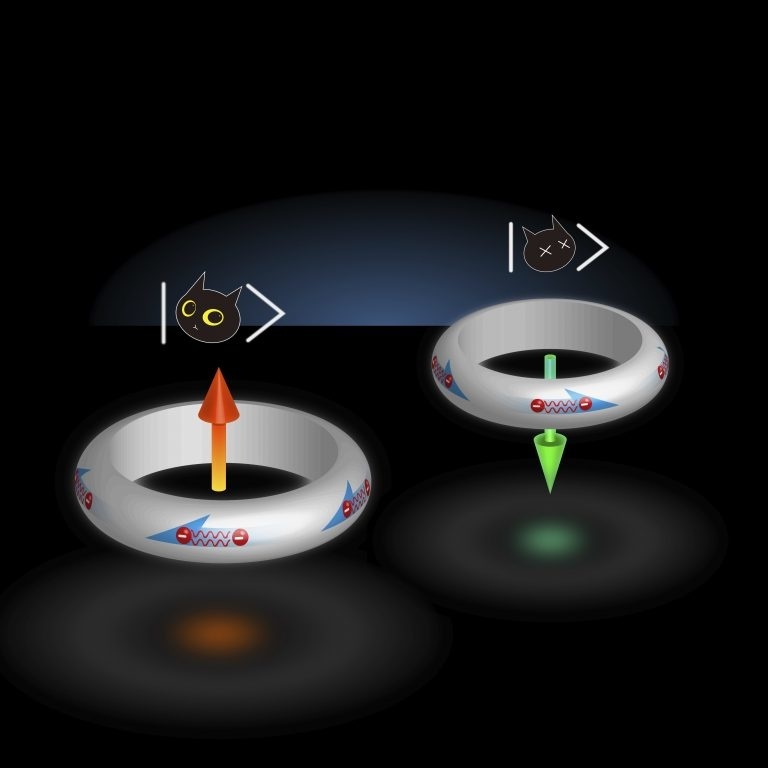

A qubit is just another example of what we call a two-state quantum mechanical system: where only two outcomes can possibly be measured, but where the exact quantum state is not definitively determined until that critical measurement is made. This applies to many quantum mechanical systems, including:

- the spin of an electron, which can be “up” (+) or “down” (-) in any direction that you choose to measure it,

- the state of a radioactive atomic nucleus, which can be in either an undecayed state (the same as an initial state) or in a decayed state,

- or the polarization of a photon, which can be either left-handed or right-handed relative to its direction of propagation,

where each of these systems behaves as though it were in a superposition of both possibilities, right up until those critical measurements are made and a final state is definitively determined to be one of the two measurable possibilities.

Qubits have something very important in common with classical bits: whenever you measure them, you’ll always see them in one of two states: the “0” state or the “1” state, with no exceptions and no in-betweens. However, they also have a very important difference: when you perform computational operations on a qubit, the qubit isn’t in a determinate state (of either “0” or “1”) like a classical bit is, but rather lives in a state that’s a superposition of “0” and “1,” like a qubit version of Schrödinger’s cat. It’s only once all the computations are done and you measure your final results that the final state of that qubit is fully determined: and where you’ll find out that it’s either “0” or “1.”

The computational difference between a “bit” and a “qubit” is very much like the quantum mechanical difference between a “classical two-state system” and a “quantum two-state system,” where even though you’re only going to get two possible outcomes in the end, the probabilities of getting “outcome #1” and “outcome #2” obey wildly different rules for the quantum system as opposed to the classical system. Whereas with a classical system, you can provide:

- the initial conditions,

- the algorithm of operations that will affect the system,

and then get a prediction for the final state of your system as a result, for a quantum mechanical system, you can only get a probability distribution as a prediction for your system’s final state. In the quantum case, only by performing the critical experiment over and over again can you hope to match and produce your predicted distribution.

Now, here’s where things get a little counterintuitive: you might think that classical computers are good tools for solving classical (but not quantum) problems, and that quantum computers would be required to solve quantum problems. It turns out, however, that one of the most important ideas in computer science — the Church-Turing thesis — directly contradicts that notion, stating that any problem that can be solved by a Turing machine, using only classical bits and classical operators, can also be solved by a computational device: i.e., a classical computer.

Just as we can solve problems that involve classical waves with classical mathematics and on classical computers, we can solve problems that involve quantum mechanical waves in the same fashion. The computational device is irrelevant: whether it’s a calculator, laptop, smartphone, supercomputer, or even a quantum computer (which can solve classical problems, too), a problem that could be solved by a Turing machine can be solved by any of these computers.

However, that doesn’t mean that all methods of solving problems are equally efficient at doing so. In particular, you could imagine trying to simulate an inherently quantum mechanical system — using not just qubits instead of regular bits, but also inherently quantum operators (or their computational equivalent, quantum gates) — where using a quantum computer might give you a tremendous advantage in efficiency, speed, and computational time over a classical computer.

A very controversial extension to the Church-Turing thesis — creatively named the extended Church-Turing thesis — basically asserts that you can’t do this. Instead, it claims that a Turing machine can always efficiently simulate any computational model, even a computational model that’s heavily (or even fully) quantum in nature.

That’s the crux of the idea behind Quantum Supremacy, and the related idea of Quantum Advantage. (Although some use these terms synonymously, there’s an important distinction that’s becoming more and more commonplace.)

- To achieve Quantum Supremacy, you’d have to disprove the extended Church-Turing thesis, where the easiest way to do so would be to provide a counterexample. In other words, if you can state a computational problem that is computationally expensive for a classical computer using all known algorithms and techniques, but that is much easier and less computationally expensive for a quantum computer, and you can demonstrate a vast speed-up in computational time using a quantum computer, then Quantum Supremacy will have been achieved.

- On the other (somewhat more ambitious) hand, you can attempt to achieve what’s known as Quantum Advantage, which would be achieving Quantum Supremacy for a problem that’s actually relevant to the real world. This could mean for a physically interesting but inherently quantum system, like electrons traveling through a double slit or phonons in a condensed matter system, or it could be for a complex classical system where using qubits and quantum gates provides a notable speed-up using fewer resources.

Quantum Supremacy, by this definition, was first (likely) achieved back in 2017-2019, but Quantum Advantage still appears to be a long way off, with several important caveats.

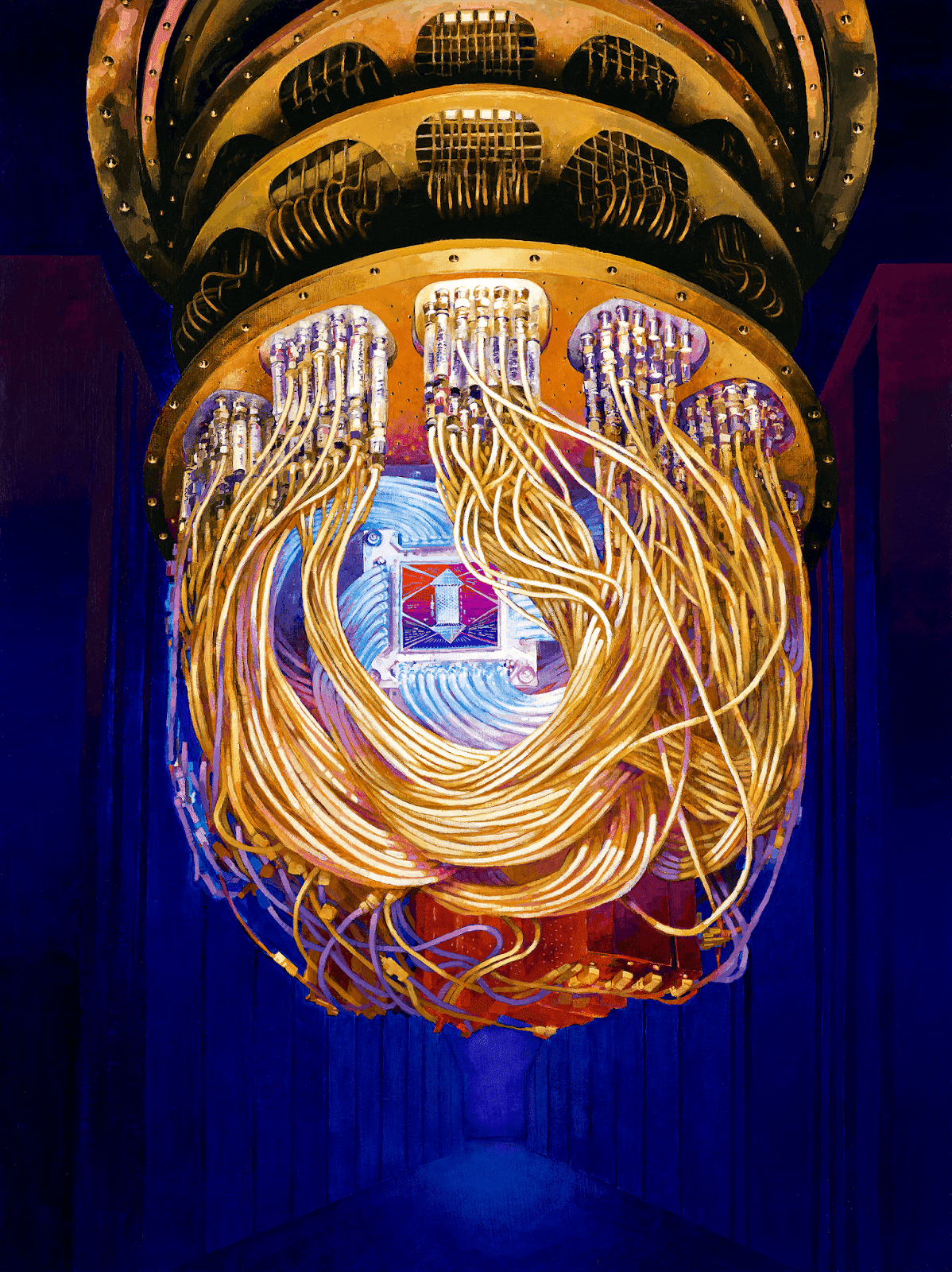

First, the current limitations on the power of quantum computing is set by two factors:

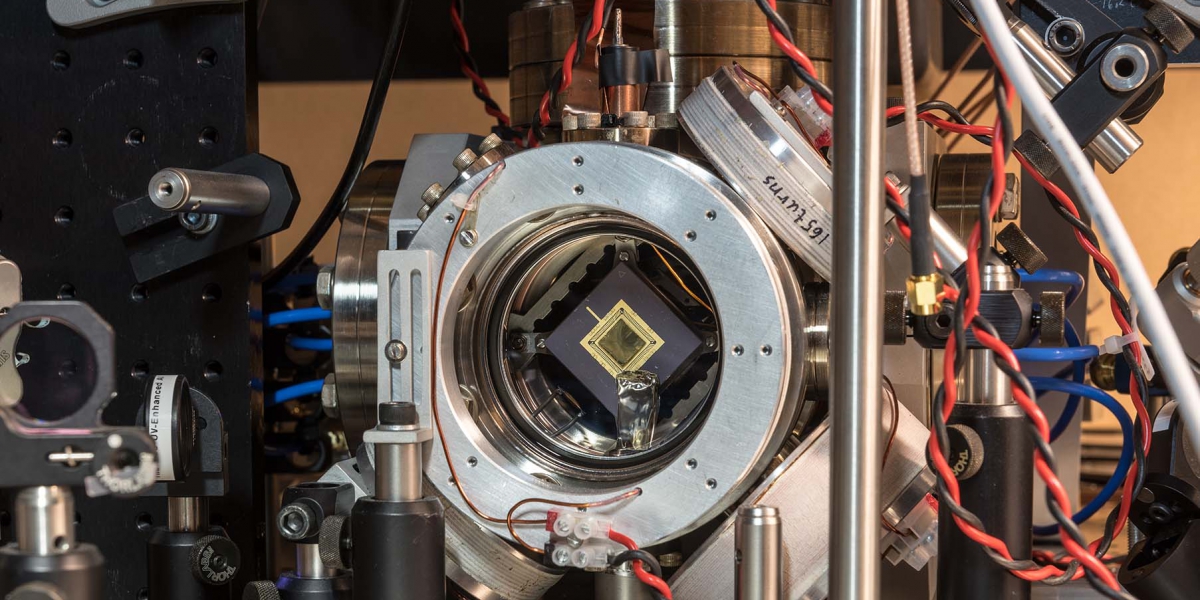

- the number of superconducting qubits that can be controlled at once by a quantum computer, which in turn limits the number of variables that can be processed in any one computation,

- and the power of quantum error-correction, as no quantum circuits are 100% reliable (they all introduce errors), and the errors you do have rise with both the time needed to complete your computation and also the number of qubits involved.

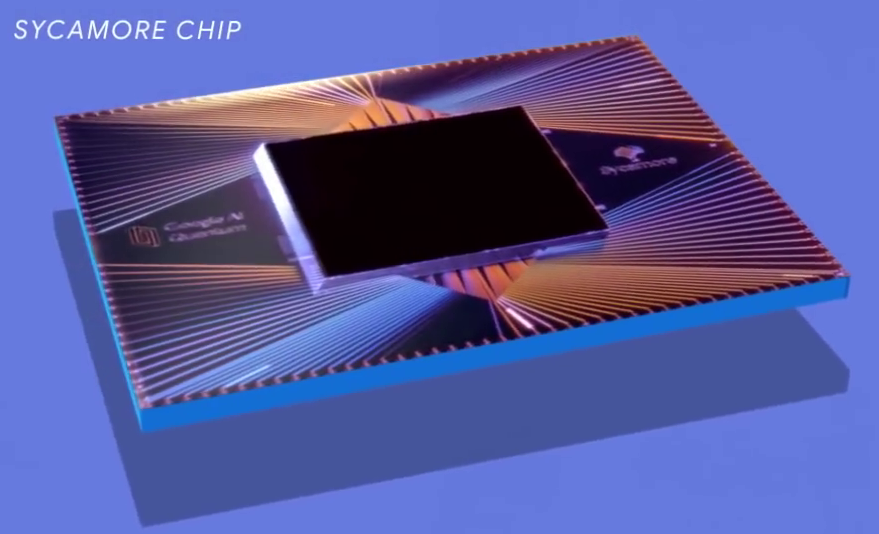

Therefore, if you want to achieve Quantum Supremacy (or its more ambitious cousin, Quantum Advantage), you’ll want to design a computational problem (or a useful computational problem) that requires only a small number of qubits, and that all the necessary computations can occur in a short time relative to the coherence time of the qubits involved.In 2019, Quantum Supremacy was demonstrated by a team at Google for a very specific problem: a problem with no real-world usefulness that was specifically designed to be extremely difficult to simulate on a classical computer, but that would be easy for a quantum computer. Although some still argue that a better classical algorithm will eventually allow this problem, and others like it, to be solved quickly on a classical computer, such arguments lack any demonstrable room-for-improvement to point to.

We are still, unfortunately, a long way from solving any useful problems much faster on a quantum computer than with a classical computer. There was a report last year, in Nature, that a traversable wormhole had been encoded onto a quantum processor and whose dynamics had been demonstrated using only 9 logical qubits: a purported demonstration of quantum advantage. Further analysis has revealed that the entire research effort was fundamentally flawed, and so it’s back to the drawing board as far as Quantum Advantage goes.

Almost every practical task you can imagine has little potential for Quantum Advantage, with classical computers performing much better in most cases. For example, let’s say you have a 20 digit semiprime number (a number that’s the product of two primes): there is no quantum computer that can solve this problem at all, whereas your off-the-shelf laptop can accomplish this in a matter of milliseconds.

However, incremental improvements are continuing to occur, with a new, more efficient quantum factoring algorithm offering a potential speed-up over using classical computers alone, and a novel quantum error-correction protocol offering the potential to preserve 12 logical qubits for 10 million syndrome cycles (a measure of needed error correction) with just 288 physical qubits, rather than the current need for 4000+ physical qubits using the standard (surface code) error-correction protocol. Someday, the cumulative combination of these and other advances will lead to the first robust demonstration of Quantum Advantage in a useful, practical system.

The ultimate goal of quantum computers, at least in the short-term, is to simulate quantum systems that are computationally expensive to simulate classically. That’s where the first practical application of Quantum Advantage is expected to arise, and it’s anyone’s guess whether the field of:

- materials science,

- high-energy physics,

- or quantum chemistry,

will be the first to reap the practical benefits of quantum computers. With greater numbers of superconducting qubits, longer coherence times for those qubits, and superior error-correction expected on the horizon, the computational power of quantum computers (including the number of logical qubits that can be used for computation) is steadily increasing. Eventually, the first practical, real-world problems that are computationally expensive for classical computers will be solved, quickly and efficiently, by quantum computers.

But no one should be under the illusion that quantum computers will someday replace classical computers for most applications, or that achieving Quantum Supremacy means that useful quantum computing has already arrived. Instead, we should expect our future to be a computationally hybrid one: where classical computers are at the root of most of our computational needs and are augmented by quantum computers in those arenas where Quantum Advantage can be achieved.

Still, just as there are many important physical phenomena that cannot be explained by the classical theories of Newton, Maxwell, or Einstein, there are many important computational problems that are awaiting the development of superior quantum computers. With Quantum Supremacy already achieved and the more practical Quantum Advantage on its way, we have so much to hope for as far as quantum computing is concerned, but so much hype — and many false claims — to simultaneously be wary of.

https://bigthink.com/starts-with-a-bang/quantum-supremacy-explained/