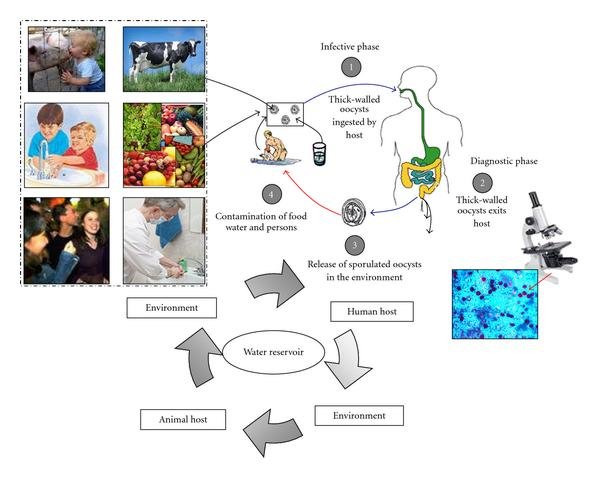

Description of transmission modes of Cryptosporidium. Following ingestion (and possibly inhalation) by a suitable host (e.g., human host), excystation occurs (infective stage, (1)). The released sporozoites invade epithelial cells of the gastrointestinal tract or other tissues, complete their cycle producing oocysts which exit host (diagnostic stage, (2)) and are released in the environment (3). Transmission of Cryptosporidium mainly occurs by ingestion of contaminated water (e.g., surface, drinking or recreational water), food sources (e.g., chicken salad, fruits, vegetables) or by person-to-person contact (community and hospital infections) (4). Zoonotic transmission of C. parvum occurs through exposure to infected animals (person-to-animal contact) or exposure to water (reservoir) contaminated by feces of infected animals (4). Putignani and Menchella, 2010.

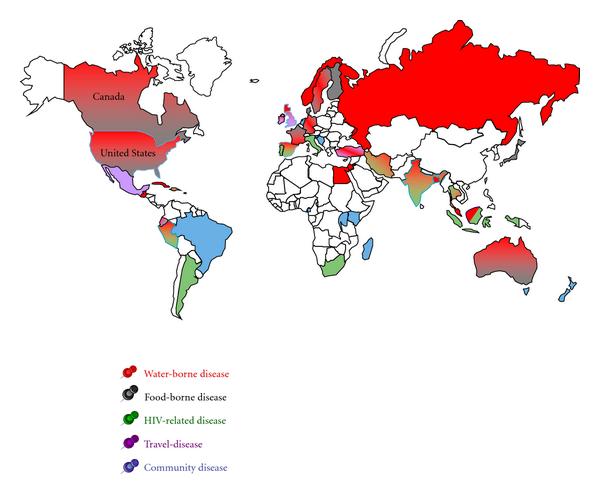

Geography of worldwide occurrence of human cryptosporidiosis outbreaks and sporadic cases. A color-coded distribution of the main cases of cryptosporidiosis reported in the literature during the last decade (1998–2008) for the entire population (adults and children) is represented here. Waterborne and foodborne diseases are represented in red and grey, respectively. Spread of the infection due to HIV immunological impairment is represented in green, and travel-related disease in pink. Where the definitions of waterborne and foodborne disease did not apply, the term community disease has been applied to person-to-person contact and is represented in pale blue. For countries characterised by two or three coexisting transmission modes, a double color-filling effect plus thick border lines have been used, consistent with the code reported above. Putignani and Menchella, 2010.

Cryptosporidium spp. are coccidians, oocysts-forming apicomplexan protozoa, which complete their life cycle both in humans and animals, through zoonotic and anthroponotic transmission, causing cryptosporidiosis. The global burden of this disease is still underascertained, due to a conundrum transmission modality, only partially unveiled, and on a p…

Emerg Infect Dis. 2014 Apr;20(4):581-9. doi: 10.3201/eid2004.121415.

Large outbreak of Cryptosporidium hominis infection transmitted through the public water supply, Sweden

Micael Widerström, Caroline Schönning, Mikael Lilja, Marianne Lebbad, Thomas Ljung, Görel Allestam, Martin Ferm, Britta Björkholm, Anette Hansen, Jari Hiltula, Jonas Långmark, Margareta Löfdahl, Maria Omberg, Christina Reuterwall, Eva Samuelsson, Katarina Widgren, Anders Wallensten, Johan Lindh

- PMID: 24655474

- PMCID: PMC3966397

- DOI: 10.3201/eid2004.121415

Free PMC article

Abstract

In November 2010, ≈27,000 (≈45%) inhabitants of Östersund, Sweden, were affected by a waterborne outbreak of cryptosporidiosis. The outbreak was characterized by a rapid onset and high attack rate, especially among young and middle-aged persons. Young age, number of infected family members, amount of water consumed daily, and gluten intolerance were identified as risk factors for acquiring cryptosporidiosis. Also, chronic intestinal disease and young age were significantly associated with prolonged diarrhea. Identification of Cryptosporidium hominis subtype IbA10G2 in human and environmental samples and consistently low numbers of oocysts in drinking water confirmed insufficient reduction of parasites by the municipal water treatment plant. The current outbreak shows that use of inadequate microbial barriers at water treatment plants can have serious consequences for public health. This risk can be minimized by optimizing control of raw water quality and employing multiple barriers that remove or inactivate all groups of pathogens.
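As a quick consistency check on the figures above, the case count and attack rate together imply the size of the population at risk. The sketch below is only illustrative arithmetic on the two approximate values quoted in the abstract; the function name `implied_population` is hypothetical and not taken from the paper.

```python
# Approximate figures quoted in the abstract (both are marked "≈" there).
cases = 27_000       # inhabitants affected
attack_rate = 0.45   # proportion of inhabitants affected


def implied_population(cases: int, attack_rate: float) -> float:
    """Population at risk implied by a case count and an attack rate."""
    if not 0 < attack_rate <= 1:
        raise ValueError("attack_rate must be in (0, 1]")
    return cases / attack_rate


population = implied_population(cases, attack_rate)
print(f"Implied population at risk: about {population:,.0f}")
```

The result, roughly 60,000 people, is what the two rounded figures imply; it should not be read as an official population count.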

Keywords: Cryptosporidium hominis infection; cryptosporidiosis/epidemiology; cryptosporidiosis/prevention and control; cryptosporidiosis/transmission; diarrhea; disease outbreaks; drinking water; molecular typing; questionnaires; risk factors; waste management, parasites; water microbiology; water supply; waterborne infections. https://pubmed.ncbi.nlm.nih.gov/24655474/

——-

Cryptosporidium and Giardia in surface water and drinking water: Animal sources and towards the use of a machine-learning approach as a tool for predicting contamination

Panagiota Ligda (a, b), Edwin Claerebout (a), Despoina Kostopoulou (b), Antonios Zdragas (b), Stijn Casaert (a), Lucy J. Robertson (c), Smaragda Sotiraki (b)

(a) Laboratory of Parasitology, Faculty of Veterinary Medicine, Ghent University, Salisburylaan 133, B-9820, Merelbeke, Belgium

(b) Laboratory of Infectious and Parasitic Diseases, Veterinary Research Institute, Hellenic Agricultural Organization – DEMETER, 57001, Thermi, Thessaloniki, Greece

(c) Parasitology, Department of Paraclinical Science, Faculty of Veterinary Medicine, Norwegian University of Life Sciences, PO Box 369 Sentrum, 0102, Oslo, Norway

Received 29 January 2020, Revised 16 April 2020, Accepted 6 May 2020, Available online 11 May 2020.

https://doi.org/10.1016/j.envpol.2020.114766

Highlights

- Cryptosporidium spp. and Giardia duodenalis were commonly found in surface waters.

- Hot spots and seasonal pattern of contamination identified.

- Parasite assemblages/species identified in water were the same as those in animals.

- Zoonotic species/assemblages of both parasites were identified in all matrices.

- Machine learning approaches revealed interactions with biotic/abiotic factors.

Abstract

Cryptosporidium and Giardia are important parasites due to their zoonotic potential and impact on human health, often causing waterborne outbreaks of disease. Detection of (oo)cysts in water matrices is challenging and few countries have legislated water monitoring for their presence. The aim of this study was to investigate the presence and origin of these parasites in different water sources in Northern Greece and identify interactions between biotic/abiotic factors in order to develop risk-assessment models. During a 2-year period, using a longitudinal, repeated sampling approach, 12 locations in 4 rivers, irrigation canals, and a water production company, were monitored for Cryptosporidium and Giardia, using standard methods. Furthermore, 254 faecal samples from animals were collected from 15 cattle and 12 sheep farms located near the water sampling points and screened for both parasites, in order to estimate their potential contribution to water contamination. River water samples were frequently contaminated with Cryptosporidium (47.1%) and Giardia (66.2%), with higher contamination rates during winter and spring. During a 5-month period, (oo)cysts were detected in drinking-water (<1/litre). Animals on all farms were infected by both parasites, with 16.7% of calves and 17.2% of lambs excreting Cryptosporidium oocysts and 41.3% of calves and 43.1% of lambs excreting Giardia cysts. The most prevalent species identified in both water and animal samples were C. parvum and G. duodenalis assemblage AII. The presence of G. duodenalis assemblage AII in drinking water and C. parvum IIaA15G2R1 in surface water highlights the potential risk of waterborne infection. No correlation was found between (oo)cyst counts and faecal-indicator bacteria. Machine-learning models that can predict contamination intensity with Cryptosporidium (75% accuracy) and Giardia (69% accuracy), combining biological, physicochemical and meteorological factors, were developed. 
Although these prediction accuracies may be insufficient for public health purposes, they could be useful for augmenting and informing risk-based sampling plans.


https://www.sciencedirect.com/science/article/abs/pii/S026974912030676X

——-

Global Issues in Water, Sanitation, and Health: Workshop Summary.

2 Lessons from Waterborne Disease Outbreaks

OVERVIEW

This chapter comprises three case studies of waterborne disease outbreaks that occurred in the Americas. Each contribution features an outbreak chronology, an analysis of contributing factors, and a consideration of lessons learned. Together, they illustrate how an intricate web of factors, including climate and weather, human demographics, land use, and infrastructure, contributes to outbreaks of waterborne infectious disease.

The chapter begins with an account, by Carlos Seas and workshop presenter and Forum member Eduardo Gotuzzo of Universidad Peruana Cayetano Heredia and Hospital Nacional Cayetano Heredia in Lima, Peru, of the massive cholera epidemic that began in urban areas of Peru in 1991 and swept across South America. The authors describe current understanding of the role of Vibrio cholerae in marine ecosystems, and consider how climatic and environmental factors, as well as international trade, may have influenced the reintroduction of this pathogen to the continent after nearly a century’s absence. The epidemic persisted for five years, then reappeared, with diminished intensity, in 1998. While attempts to control the epidemic through educational campaigns aimed at improving sanitation were unsuccessful in the short term, Seas and Gotuzzo report that, following a significant investment in sanitation in the wake of this public health disaster, transmission rates of other waterborne infectious diseases, including typhoid fever, declined in Peru. They note that, by understanding the ecology of V. cholerae, researchers may be able to predict relative risk for pathogen transmission from marine environments and thereby aid efforts at preventing epidemics.

In 1993, two years after cholera struck Peru, an epidemic of cryptosporidiosis in Milwaukee, Wisconsin, sickened hundreds of thousands of people and caused at least 50 deaths, demonstrating that even “modern” water treatment and distribution facilities are vulnerable to contamination by infectious pathogens. In their contribution to this chapter, workshop presenter Jeffrey Davis and coauthors recount their investigation of this outbreak, which resulted from the confluence of multiple and diverse environmental and human factors. Based on lessons learned from their discoveries, the authors made recommendations, which authorities implemented, to prevent further outbreaks in the Milwaukee water system, resulting in significant improvements in water quality. Their findings have proven applicable to other water treatment facilities that share Lake Michigan and have received attention from water authorities worldwide.

The final paper in this chapter, by workshop presenter Steve Hrudey and Elizabeth Hrudey of the University of Alberta, Canada, discusses an episode of bacterial contamination of the water in Walkerton, Ontario, in 2000. The outbreak sickened nearly half of the town’s 5,000 residents and caused 7 deaths, as well as 27 cases of hemolytic uremic syndrome, a severe kidney disease. Several incidents of human error and duplicity figure prominently among the causes of this entirely preventable outbreak, the authors explain. “Because outbreaks of disease caused by drinking water remain comparatively rare in North America,” they conclude, “complacency about the dangers of waterborne pathogens can easily occur.” Based on their findings, they present a framework for water system oversight intended to save other communities from Walkerton’s fate.

THE CHOLERA EPIDEMIC IN PERU AND LATIN AMERICA IN 1991: THE ROLE OF WATER IN THE ORIGIN AND SPREAD OF THE EPIDEMIC

Carlos Seas, M.D., Universidad Peruana Cayetano Heredia; Eduardo Gotuzzo, M.D., FACP, Universidad Peruana Cayetano Heredia

At Athens a man was seized with cholera. He vomited, and was purged and was in pain, and neither the vomiting nor the purging could be stopped; and his voice failed him, and he could not be moved from his bed, and his eyes were dark and hollow, and spasms from the stomach held him, and hiccup from the bowels. He was forced to drink, and the two (vomiting and purging) were stopped, but he became cold.

Hippocrates

After an absence of almost one century, cholera reappeared in South America in Peru during the summer of 1991. This event was totally unexpected by the scientific community, which had anticipated the spread of cholera to the continent from Africa and had hypothesized its introduction by Brazil following well-recognized routes of dissemination of the disease that involve trade and commerce. The further spread of the epidemic was very rapid; all Peruvian departments had reported cholera cases in less than six months; almost all Latin American countries, with the exception of Uruguay, had reported cases within one year of the beginning of the epidemic. The chains of events that triggered and disseminated the epidemic into the continent have not been fully elucidated, but evidence is being gathered on the possible role of marine ecosystems, climate and environmental factors, and the pivotal role of water. We discuss here the evidence in support of water’s role in cholera dynamics.

The Environmental Life Cycle of Vibrio cholerae

The natural reservoirs of V. cholerae are aquatic environments, where O1 and non-O1 serogroups coexist. V. cholerae survives by attaching to and forming symbiotic associations with algae or crustacean shells (Figure 2-1). In these environments, V. cholerae multiplies and can persist for years in a free-living cycle without human intervention, as it has been elegantly described by Dr. Colwell and her associates at the International Centre for Diarrheal Diseases Research in Dhaka, Bangladesh (Colwell et al., 1990).

FIGURE 2-1

Vibrio cholerae O1 attached to a copepod. SOURCE: Courtesy of Rita Colwell, Ph.D., University of Maryland.

A number of environmental factors modulate the abundance of Vibrio, including, but not limited to, temperature, pH, salinity, and nutrient availability. Under adverse conditions, V. cholerae survives in a dormant state with all metabolic pathways shut down, which can be reactivated again when suitable conditions return. Additionally, V. cholerae can produce biofilms—surface-associated communities of bacteria with enhanced survival under negative conditions—which can switch to active bacteria and induce epidemics.

The ability of V. cholerae to regulate its metabolism based on the environmental conditions of its natural reservoir may explain the endemicity of cholera in many parts of the world. During the cholera epidemic in Peru, V. cholerae was isolated from many aquatic environments, including not only marine ecosystems, but riverine and lake environments. Even one of the highest commercially navigable freshwater lakes in the world, Lake Titicaca, located 3,827 meters above sea level on the border of Peru and Bolivia, was impacted by the cholera epidemic of 1991.

Humans are only temporary reservoirs of V. cholerae. Interestingly, lytic phages modulate the abundance of V. cholerae in the human intestine, but on the other hand, V. cholerae are able to up-regulate certain genes in the intestine of humans resulting in a short-time hyperinfectious state. As illustrated in Figure 2-2, V. cholerae is introduced to humans from its aquatic environment through contamination of food and water sources.

FIGURE 2-2

A hierarchical model for cholera transmission. SOURCE: Reprinted from Lipp et al. (2002) with permission from the American Society for Microbiology.

The Origins of the Latin American Epidemic

The Latin American cholera epidemic was officially declared in Peru during the third week of January 1991, almost simultaneously in three cities along the north coastal area of the country. By the end of that year, almost 320,000 cases had been officially reported to the Pan American Health Organization by the Peruvian Ministry of Health. Nearly 45,000 cases occurred every week, in what was considered the worst cholera epidemic of the century in Peru (Gotuzzo et al., 1994). There were several distinctive features of this epidemic:

- Very high attack rates were reported soon after the epidemic started.

- Cholera accounted for almost 80 percent of all acute diarrhea cases in the country irrespective of the degree of dehydration and age group.

- The epidemic was initially concentrated in urban areas, where it spread very rapidly, suggesting a common source of dissemination.

- Transmission was halted in very few areas, where treatment and chlorination of municipal water was possible, suggesting a critical role of water in the transmission of the disease.

- Very low case-fatality rates were reported from urban areas where patients had access to treatment by well-trained health personnel, but higher figures were reported from isolated communities where patients did not have access to health centers, a situation similar to those reported from Africa in refugee settings under political instability.

Although the epidemic spread to neighboring countries, it never reached the magnitude seen in Peru, which suffered that year from serious economic constraints and reported the lowest level of sanitary coverage and sanitary investment in the region. During 1991, approximately 50 percent of the population in urban cities of Peru received treated municipal water; intermittent supply and clandestine connections were common in many cities of the country (Figure 2-3). Additionally, less than 10 percent of sewage was treated properly. These conditions prevailed before the beginning of the epidemic and were responsible for its very rapid spread. The epidemic lasted for five years until 1995, only to reappear in 1998 with much less intensity, as shown in Figure 2-4 (WHO, 2008). The message conveyed to the population at the beginning of the epidemic to curtail transmission focused on avoiding raw fish and shellfish and on boiling water for drinking.

FIGURE 2-3

A shantytown in Peru during 1991. SOURCE: Instituto de Medicina Tropical Alexander von Humboldt, Lima, Peru.

FIGURE 2-4

Cholera in the Americas, 1991–2006. SOURCE: Based upon data compiled from and reported in the WHO’s Weekly Epidemiological Record.

Massive investment in sanitation followed the epidemic, which was responsible for a reduction in transmission not only of cholera but also of other enteric infections, such as numerous parasitic infections and typhoid fever. The case of typhoid fever deserves special mention. Many experienced doctors in Lima saw a marked reduction in the incidence of typhoid fever in their practices as a consequence of improvements in sanitation and hygiene, a situation that was also seen at our Institute (Figure 2-5).

FIGURE 2-5

Typhoid fever cases seen at the Alexander von Humboldt Tropical Medicine Institute in Lima, Peru, 1987–1993.

Before 1990, typhoid fever was responsible for the majority of episodes of undifferentiated fever lasting at least five days in Lima. Approximately 70 to 100 patients with complicated typhoid fever were hospitalized yearly in our institution in the decade before the cholera epidemic; these figures were reduced tenfold after 1991. The reduction in typhoid fever incidence was so dramatic that the disease is almost unknown to the generation of physicians trained after 1991, with consequent delays in diagnosis and development of complications, a situation that would have been unthinkable in the decade before 1990.

Still, a question remains unanswered: From where did this huge cholera epidemic originate? Although both nontoxigenic O1 and non-O1 V. cholerae strains had been isolated from environmental sources and from patients in Peru and other countries in the region, the hypothesis that these Vibrio became residents in aquatic environments of coastal Peru and then acquired, through phage infection, the virulence genes that mediate toxigenic expression seems unlikely. Additionally, genetic comparison of the Vibrio responsible for the epidemic, V. cholerae O1 serotype Inaba and biotype El Tor, with endemic agents in Asia disclosed very similar patterns, suggesting common ancestors or spread from one place to another. The latter option seems more reasonable. Another hypothesis suggests that V. cholerae was seeded into the marine ecosystems of northern Peru a few months before the epidemic started, which seems more likely in light of what was discussed earlier—that Vibrio was imported from Asia transported by crew ships, or emptied from vessels discharging bilge water contaminated with the bacterium.

From its aquatic environment Vibrio was first amplified along the north coast and then introduced almost simultaneously into several cities of the country (Figure 2-6). This hypothesis was proposed after analyzing data generated by a retrospective study that reviewed charts of patients who had attended several hospitals along the Peruvian North Coast in 1989 and 1991, and disclosed that seven patients fulfilled the clinical definition of cholera proposed by the World Health Organization three months before the epidemic had started (Seas et al., 2000). These adult patients presented with severe dehydration and watery diarrhea, a clinical presentation that had not been seen at these health centers the year before the epidemic. Although no convincing evidence proves definitively that these cases were due to cholera (clinical laboratory cultures had not been obtained for these cases), the clinical presentation is similar to that described in other epidemic areas for cholera, and also similar to that which many Peruvian doctors subsequently saw (Figure 2-7).

FIGURE 2-6

The seventh cholera pandemic. SOURCE: Carlos Seas. Cólera. Medicina Tropical. CD-ROM. Version 2002. Instituto de Medicina Tropical, Príncipe Leopoldo. Amberes, Bélgica. Instituto …

FIGURE 2-7

Location of patients in Peru with presumed cholera, identified before the epidemic of 1991. SOURCE: Reprinted from Seas et al. (2000) with permission from the American Journal of …

Which Forces Drove the Spread of Vibrio into the Pacific Coastal Areas of Peru?

Dr. Rita Colwell’s theory on the environmental niche for V. cholerae in aquatic ecosystems is crucial for understanding cholera dynamics. Factors that modify the survival of Vibrio in the environment may dramatically influence cholera transmission. Climate change and climate variability are among these critical forces. While the association between climate, environmental factors, and cholera transmission had been proposed a long time ago, the role of climate in cholera dynamics has been better elucidated in recent years.

Time series analysis has demonstrated a relationship between the appearance of cholera cases in Bangladesh and occurrence of El Niño-Southern Oscillation (ENSO). Observations have linked the interannual variability of ENSO with the proportion of cholera cases in Dhaka, Bangladesh. Additionally, climate variability due to ENSO and temporary immunity explained the interannual cycles of cholera in rural Matlab, Bangladesh, for a period of almost 30 years (Pascual et al., 2000). The net effect of ENSO—rise in both sea water temperature and planktonic mass—modifies the abundance of V. cholerae in the environment by affecting the concentration of plankton to which V. cholerae is attached and affects the concentration of nutrients and salinity.

Water temperature affects cholera transmission, as has been observed in the Bay of Bengal, Bangladesh. All these data support the role of ENSO in the interannual variability of endemic cholera. An unproven hypothesis suggests that El Niño triggered the epidemic of cholera in Peru in 1991 by amplifying the planktonic mass and dispersing existing Vibrio along the north coast of the country. Then Vibrio was introduced into the continent by contaminated food and subsequently by contamination of the water supply system (Seas et al., 2000).

Several studies conducted in Peru after 1991 have shown an association between warmer air temperatures and cholera cases in children and adults. Additionally, toxigenic V. cholerae O1 has been isolated from aquatic environments in the coastal waters of Peru, suggesting that it has been successful in adapting to these environments, as has been described in Bangladesh and India. These findings support the theory of an environmental niche for V. cholerae O1 in Latin America and temporal associations between ENSO and cholera outbreaks from 1991 onward (Salazar-Lindo et al., 2008).

The Spread of the Cholera Epidemic in Peru as a Model to Understand Transmission in the Region

As illustrated earlier in Figure 2-2, the cholera transmission cycle involves infection of humans by the consumption of contaminated food and water and further shedding of the bacteria into the environment via contaminated stools. Very high attack rates accompany human infection under favorable conditions, especially in previously nonexposed populations. Very high household transmission rates also occur.

Transmission via contaminated water and food has long been recognized. During the Latin American epidemic, acquisition of the disease by drinking contaminated water from rivers, ponds, lakes, and even tube well sources was documented. Contamination of municipal water was the main route of cholera transmission in Trujillo, Peru, during the epidemic in 1991. Drinking unboiled water, introducing contaminated hands into containers used to store drinking water, drinking beverages from street vendors, drinking beverages to which contaminated ice had been added, and drinking water outside the home are recognized exposure risk factors for cholera. In addition to the crucial role of water in the transmission of cholera, poor hygienic conditions also contribute to the spread of cholera by exposing susceptible persons to the pathogen. Educational campaigns were implemented throughout the country with little effect in the short term.

Certain host factors may have played a role in the transmission of cholera. Infection by Helicobacter pylori, the effect of the O blood group, and the protective effect of breast milk deserve to be mentioned. Studies from Bangladesh and Peru show that people infected by H. pylori are at higher risk of acquiring cholera than people not infected by H. pylori (León-Barúa et al., 2006). Additionally, the risk of acquiring severe cholera among people coinfected with H. pylori is higher in patients without previous contact with V. cholerae, as measured by the absence of vibriocidal antibodies in the serum (Clemens et al., 1995).

H. pylori is highly endemic in developing countries, particularly among low-income status individuals. Infection causes a chronic gastritis that induces hypochlorhydria, which in turn reduces the ability of the stomach to limit the Vibrio invasion. Patients carrying the O blood group, which is widespread in Latin America, have a higher risk of developing severe cholera. Higher affinity of the cholera toxin to the ganglioside receptor in patients with O blood group and lower affinity in patients of A, B, and AB blood groups may explain this association. Finally, the protective effect of breast milk, possibly mediated by a high concentration of secretory IgA anti-cholera toxin, has been proposed.

Preventing Future Epidemics

The small number of autochthonous cases reported from developed countries, such as the United States and Australia, where Vibrio cholerae O1 is a resident of aquatic environments, provides additional support for the well-known concept that hygiene and sanitation can control cholera transmission. These relatively simple measures are very difficult to implement in the developing world (Zuckerman et al., 2007).

Alternative ways to prevent cholera transmission have been explored, including but not limited to the boiling and/or chlorination of water, exposing water to sunlight, filtering water using Sari cloth, and educating the population at risk on appropriate hygienic practices (Colwell et al., 2003). Using new information generated from the studies that delineated the ecological niche of Vibrio may help in predicting the onset of an epidemic, which may have a tremendous impact on prevention. Searching for V. cholerae O1 in municipal sewage and environmental samples in endemic areas could be used as a warning signal of future epidemics (Franco et al., 1997), and monitoring the movement and abundance of plankton by satellite seems attractive, but more studies are needed to support the implementation of these methods.

Conclusions

The cholera epidemic in Latin America was characterized by an explosive beginning with rapid spread in urban areas of Peru and other poor neighboring countries. The available information suggests that environmental factors amplified the existing Vibrio population and induced an epidemic, which was further amplified by contamination of municipal water and food. Water played a key role not only in maintaining Vibrio in its natural reservoir but also in disseminating the epidemic.

Acknowledgments

We would like to express our most sincere gratitude to Dr. Rita Colwell and Dr. Bradley Sack for sharing with us valuable information and images that were reproduced in this manuscript.

LESSONS FROM THE MASSIVE WATERBORNE OUTBREAK OF CRYPTOSPORIDIUM INFECTIONS, MILWAUKEE, 1993

Jeffrey P. Davis, M.D., Wisconsin Division of Public Health; William R. Mac Kenzie, M.D., Centers for Disease Control and Prevention; David G. Addiss, M.D., M.P.H., Fetzer Institute

The Investigation Begins

On Monday, April 5, 1993, the City of Milwaukee Health Department (MHD) received reports of increased school and workplace absenteeism due to diarrheal illness in Milwaukee County, Wisconsin. This appeared to be quite widespread, particularly on the south side of the city. In one particular hospital, during the previous weekend, more than 200 individuals had been cultured for bacterial enteric pathogens. The hospital ran out of bacterial culture media, yet none of the patients tested positive for bacterial enteric pathogens. Routine tests for ova and parasites done on many stools did not reveal pathogens. Pharmacies were experiencing widespread shortages of antidiarrheal medications. Because of the clinical profile of illness and the apparent magnitude of the outbreak, we considered this outbreak to be due to a product with wide local distribution with drinking water being the most likely vehicle. The Wisconsin Division of Health (now the Wisconsin Division of Public Health [DPH]) offered onsite assistance to investigate and control this outbreak. The offer was accepted; lead staff arrived on April 6 and additional team members arrived on April 7.

The Director of the Bureau of Laboratories, MHD, requested and received water quality and treatment data from the Milwaukee Water Works (MWW). While preliminarily reviewing these data, he noted striking spikes in the turbidity of water treated in one of the two MWW treatment plants (the southern plant); these spikes in finished water turbidity occurred on multiple days in late March and early April. This was reminiscent of a large waterborne outbreak of Cryptosporidium infections in Carrollton, Georgia, that occurred among customers of a municipal water supply (Hayes et al., 1989). On April 6, following discussion with DPH staff, the laboratory director selected some representative stool specimens among those that had tested negative for enteric pathogens and tested them for protozoan parasites including Cryptosporidium, testing that initially had not been done in the clinical laboratories. Early on April 7, DPH, MHD, and Wisconsin Department of Natural Resources staff met with MWW officials. By late afternoon on April 7, Cryptosporidium had been reported in stool specimens from three adults tested by the MHD laboratory and in stools from five adults tested at other Milwaukee laboratories. These adults resided at widespread locations in southern Milwaukee and one neighboring municipality within Milwaukee County. Following a meeting with city and state public health and water treatment officials, Milwaukee’s mayor, John Norquist, issued a boil water advisory on the evening of April 7. The outbreak received considerable media attention for more than two weeks. Inordinate numbers of people were inconvenienced. Pharmacies continued to sell large quantities of antidiarrheal medications. Many industries with processes dependent on treated water were challenged.

The city of Milwaukee occupies most of Milwaukee County. Three rivers—the Milwaukee, the Menomonee, and the Kinnickinnic—flow through the county and converge in the city, where they empty into Lake Michigan within a breakfront; the ambient flow of the river water entering the lake is southerly (Figure 2-8). Figure 2-8 also shows the location of the two MWW water treatment plants: the northern plant received water by gravity flow through an intake 1.2 miles offshore, and the southern plant received water by gravity flow through an intake 1.4 miles offshore.

FIGURE 2-8

Location of the three rivers that flow through Milwaukee County, Wisconsin, the breakfront protecting the city of Milwaukee harbor, and the northern and southern Milwaukee Water Works water treatment …

Figure 2-9, which depicts daily turbidity values for treated water at both plants during March and April 1993, demonstrates several spikes in treated water turbidity at the southern plant (Mac Kenzie et al., 1994b). The first peak, which occurred on or about March 23, was the largest recorded at the southern plant in more than 10 years. It was followed by a considerably larger, sustained peak with maximum turbidity measurements on March 28 and 30, and another peak on April 5. The southern plant was closed on April 7, but the water there was sampled on April 8 (Mac Kenzie et al., 1994b).

FIGURE 2-9

Maximal turbidity of treated water in the northern and southern water treatment plants of the Milwaukee Water Works from March 1 through April 28, 1993. NTU denotes nephelometric turbidity units.

At the time of the outbreak, not much was known about Cryptosporidium in water. The pathogen had first been documented in humans in 1976 (Meisel et al., 1976; Nime et al., 1976), and by the early 1980s, was recognized as an AIDS-defining illness (Current et al., 1983). There had been several water-associated outbreaks in the United States and in the United Kingdom prior to the Milwaukee event, although most were associated with surface water contamination (D’Antonio et al., 1985; Gallagher et al., 1989; Joseph et al., 1991; Leland et al., 1993; Richardson et al., 1991). The 1987 outbreak in Carrollton, Georgia, affected an estimated 13,000 customers of a municipal water supply, but it was not associated with high turbidity of treated water (Hayes et al., 1989).

To evaluate other possible microbiologic etiologies for the Milwaukee outbreak, we reviewed the results of laboratory examinations of stool samples conducted in 14 different local laboratories between March 1 and April 16, 1993 (Mac Kenzie et al., 1994b). No increase in bacterial enteric pathogens was found. Prior to recognition of the outbreak, between March 1 and April 6, only 42 Cryptosporidium tests (nearly all of them on samples from patients with HIV/AIDS) were conducted, but after the outbreak was recognized more than 1,000 Cryptosporidium tests were conducted in a seven-day period. During both intervals, nearly one-third of these samples tested positive for Cryptosporidium (Mac Kenzie et al., 1994b). The percentage of positive Cryptosporidium tests, although not as high as one might expect in an outbreak, was similar to the rate of positive tests noted during the Carrollton event (39 percent). We believe that these results reflect the limits of standard microbiologic testing for Cryptosporidium available at that time.

Thus, early in our investigation we established that Cryptosporidium was the most likely cause of the outbreak and hypothesized that treated water from the southern water treatment plant was the vehicle for the majority of human infections associated with this outbreak. In addition to the primary task of testing this hypothesis, there were many tasks and questions we sought to address, which included determining

- the magnitude and timing of cases associated with the outbreak,

- the spectrum of clinical symptoms experienced in a large population of persons infected with Cryptosporidium,

- the incubation period of cryptosporidiosis following exposure,

- the timing of contamination of Milwaukee water,

- the secondary attack rate of cryptosporidiosis among family members not exposed to Milwaukee water,

- the frequency of recurrence of the symptoms of cryptosporidiosis after initial recovery,

- the presence of Cryptosporidium oocysts in Milwaukee water archived during the time of putative exposure of Milwaukee residents,

- factors at the MWW southern water treatment plant that allowed Cryptosporidium oocysts to pass through in treated water to infect the public,

- mortality associated with the outbreak,

- the frequency of asymptomatic infection among exposed Milwaukee residents, and

- the ultimate source of these Cryptosporidium oocysts: animals or humans.

To develop an epidemiologic case definition of cryptosporidiosis, we compared people with laboratory-confirmed infections with those who had clinical diagnoses (Mac Kenzie et al., 1994b). The age and gender profiles of these two patient classes were similar, although laboratory-confirmed cases were skewed, as one might expect, toward more serious illness. There was a uniform occurrence of diarrhea/watery diarrhea in all cases. Cramps, fatigue, muscle aches, vomiting, and fever occurred more frequently in the laboratory-tested individuals. Temporal distribution of the two patient classes was virtually identical (Mac Kenzie et al., 1994b).

Rapid Hypothesis Testing—Nursing Home Study

To rapidly test the hypothesis that the southern water treatment plant was the likely source of the outbreak, we examined rates of diarrhea among geographically fixed populations—residents of nursing homes—in different parts of Milwaukee (Mac Kenzie et al., 1994b). Due to their relative geographic location, nine nursing homes received drinking water primarily from the north plant; seven received water primarily from the south plant. Information on diarrhea was collected routinely at these nursing homes, so we were able to review their logs to establish the rate of diarrhea (defined as three or more loose stools per 24-hour period).

We found a spike in diarrheal illness peaking between April 1 and 6 among nursing home residents served by the south water plant. High rates of diarrhea continued into the following week and returned to baseline by April 19 (Mac Kenzie et al., 1994b). By contrast, diarrhea rates at nursing homes served by the north water plant remained at baseline throughout March and April. Importantly, the one nursing home in the south that obtained its water from a well had no increase in diarrhea rates. We tested stools from 69 nursing home residents with diarrhea from the south, and 12 from the north, for Cryptosporidium. Thirty-five (51 percent) of the southern samples were positive, but every northern sample was negative (Mac Kenzie et al., 1994b).
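The stool-testing comparison above can be checked with a few lines of arithmetic. The sketch below recomputes the positivity proportions from the counts quoted in the text and, purely as an illustration, asks how likely it would be to see zero positives among the 12 northern samples if positives were spread across both groups by chance (a hypergeometric calculation). The chance calculation is an assumption added here for illustration and is not described in the source as part of the investigators' analysis.

```python
from math import comb

# Counts quoted in the text (Mac Kenzie et al., 1994b)
south_tested, south_positive = 69, 35
north_tested, north_positive = 12, 0

south_rate = south_positive / south_tested
north_rate = north_positive / north_tested

# Illustrative assumption: treat the 35 positives as randomly allocated
# among all 81 tested residents and ask how often the 12 northern
# samples would contain exactly k positives (hypergeometric probability).
total_tested = south_tested + north_tested
total_positive = south_positive + north_positive


def prob_north_positives(k: int) -> float:
    """Probability that exactly k of the northern samples are positive."""
    return (
        comb(total_positive, k)
        * comb(total_tested - total_positive, north_tested - k)
        / comb(total_tested, north_tested)
    )


p_zero_north = prob_north_positives(0)

print(f"southern positivity: {south_rate:.0%}")
print(f"northern positivity: {north_rate:.0%}")
print(f"chance of 0/12 northern positives under random allocation: {p_zero_north:.5f}")
```

A very small probability here is consistent with the authors' reading that the difference between plants was not a chance finding, although the small northern sample limits how much weight the calculation can bear.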

Magnitude and Impact of the Milwaukee Outbreak— Random Telephone Survey

To assess the magnitude of this outbreak, we conducted a random telephone survey of 840 households in Milwaukee and in the four surrounding counties, asking about the number of cases of watery diarrhea experienced between March 1 and April 28 (Mac Kenzie et al., 1994b). The response rate was 73 percent, and included 1,663 household members whose demographic features closely tracked 1990 census data. Among this sample, 436 (26 percent) were reported to have had watery diarrhea during the survey period, with the peak number of cases occurring during April 3 through April 5. As may be seen in Figure 2-10, the attack rate among MWW customers whose homes were served principally by the northern plant was 26 percent, compared with 52 percent of those from homes served principally by the southern plant. Residents receiving a mixture of water from both plants had an intermediate attack rate of 35 percent (Mac Kenzie et al., 1994b).
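As a quick check on the survey figures, the following sketch recomputes the overall attack rate from the counts given above and compares the plant-specific rates as reported. Only the overall numerator and denominator appear in the text; the plant-specific values are the published percentages, so the ratio is a ratio of reported rates rather than a recomputation from raw counts.

```python
# Counts quoted in the text (Mac Kenzie et al., 1994b)
household_members = 1663
watery_diarrhea_cases = 436


def attack_rate(cases: int, population: int) -> float:
    """Proportion of the surveyed population that became ill."""
    return cases / population


overall = attack_rate(watery_diarrhea_cases, household_members)

# Plant-specific attack rates as reported in the text (raw counts not given)
reported_rates = {"northern": 0.26, "mixed": 0.35, "southern": 0.52}
south_to_north_ratio = reported_rates["southern"] / reported_rates["northern"]

print(f"overall attack rate: {overall:.1%}")
print(f"southern-to-northern rate ratio: {south_to_north_ratio:.1f}")
```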

FIGURE 2-10

Rate of watery diarrhea from March 1 through April 28, 1993, among respondents in a random-digit telephone survey of households in the five county Greater Milwaukee area, by Milwaukee Water Works …

In our survey of Milwaukee and the four surrounding counties there was an overall attack rate of watery diarrhea of 26 percent. Applying this to the population of the five-county area, and subtracting a background rate of 0.5 percent, we estimated that 403,000 residents had watery diarrhea associated with this outbreak (certainly other people outside the survey area had also become ill, but we could not estimate their numbers; Mac Kenzie et al., 1994b). In our survey, 11 percent of people with watery diarrhea visited health-care providers (44,000 estimated visits) and 1.1 percent were hospitalized (4,400 estimated hospitalizations).

Approximately 1.8 days of productivity (school or work) were lost per case patient, which projects to 725,000 person-days lost, including about 479,000 person-days among those in the workforce (ages 18 to 64 years; Mac Kenzie et al., 1994b). Based mainly on review of death certificate data, we attributed 69 deaths to this outbreak; most of these were premature deaths among people with AIDS/HIV infection (Hoxie et al., 1997).
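The burden projections in the two paragraphs above follow directly from the estimated case count. The sketch below reproduces that arithmetic using only figures quoted in the text; the rounding precision is an assumption chosen to match how the estimates are reported. The five-county population used to derive the 403,000 estimate is not stated in the text, so that step is not recomputed here.

```python
# Figures quoted in the text (Mac Kenzie et al., 1994b)
estimated_cases = 403_000
fraction_visiting_provider = 0.11
fraction_hospitalized = 0.011
days_lost_per_case = 1.8

provider_visits = estimated_cases * fraction_visiting_provider
hospitalizations = estimated_cases * fraction_hospitalized
person_days_lost = estimated_cases * days_lost_per_case

# Rounding precision is an assumption chosen to match the reported figures
print(f"provider visits:   ~{round(provider_visits, -3):,.0f}")
print(f"hospitalizations:  ~{round(hospitalizations, -2):,.0f}")
print(f"person-days lost:  ~{round(person_days_lost, -3):,.0f}")
```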

Study of Short-Term Visitors—Determining the Timing of Exposure, Incubation Period, and Frequency of Secondary Transmission

We studied short-term visitors to the Milwaukee area to answer the following questions:

- When was Cryptosporidium present in the water system?

- How long was the incubation period?

- How frequent was secondary (person-to-person) transmission?

Specifically, we studied people who visited the five-county Milwaukee area one time between March 15 and April 15, unaccompanied by other members of their households (Mac Kenzie et al., 1995b). We identified 94 such individuals who had stayed in the area less than 48 hours and had either laboratory-confirmed cryptosporidiosis (n = 54) or clinical cryptosporidiosis (n = 40) following their visit. Two-thirds of these visitors had stayed for less than 24 hours, and all had drunk beverages that contained unboiled tap water (the median amount consumed was 16 ounces while in Milwaukee; 32 percent of these ill visitors drank less than eight ounces) (Mac Kenzie et al., 1995b).

We examined the dates of arrival in Milwaukee and the dates of illness onset among the 94 brief-interval visitors, as shown in Figure 2-11. Using dates of arrival as dates of initial exposure, oocysts were presumed present in the treated water during 13 consecutive days (March 24 through April 5). By subtracting the date of arrival from the date of illness onset for each ill visitor, incubation periods could be calculated; the median incubation period was 7 days (range: 1–14 days). Diarrhea abated only to recur in 39 percent of visitors with laboratory-confirmed infection, as it had in Milwaukee residents, suggesting that recurrence of diarrhea was not due to reinfection (Mac Kenzie et al., 1995b).
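The incubation-period method described above (onset date minus arrival date for each short-term visitor, then the median) can be sketched as follows. The five visitor records are hypothetical values invented for illustration and are not study data; they were chosen only so that the output mirrors the summary figures reported in the text.

```python
from datetime import date
from statistics import median

# Hypothetical (arrival, illness onset) pairs, for illustration only
visitors = [
    (date(1993, 3, 24), date(1993, 3, 31)),
    (date(1993, 3, 27), date(1993, 4, 1)),
    (date(1993, 4, 1), date(1993, 4, 10)),
    (date(1993, 4, 3), date(1993, 4, 4)),
    (date(1993, 4, 5), date(1993, 4, 19)),
]

# Arrival is treated as the date of first exposure, as in the text
incubation_days = [(onset - arrival).days for arrival, onset in visitors]
median_incubation = median(incubation_days)

# The span of arrival dates among ill visitors bounds the period during
# which oocysts are presumed to have been present in treated water
first_exposure = min(arrival for arrival, _ in visitors)
last_exposure = max(arrival for arrival, _ in visitors)
exposure_window_days = (last_exposure - first_exposure).days + 1

print(f"median incubation: {median_incubation} days")
print(f"range: {min(incubation_days)} to {max(incubation_days)} days")
print(f"presumed contamination window: {exposure_window_days} days")
```

Restricting the analysis to visitors who stayed less than 48 hours is what makes the arrival date a usable proxy for the exposure date.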

FIGURE 2-11

(A) Dates of arrival and (B) dates of onset of illness for 54 persons with laboratory-confirmed Cryptosporidium infection (black bars) and 40 persons with clinically defined …

To determine the rate of secondary household transmission, we looked at nonvisiting members of the 94 visitors’ households. We surveyed 74 people who fit this description, of whom 5 percent experienced watery diarrhea; thus, we concluded that the rate of secondary household transmission was quite low (Mac Kenzie et al., 1995b).

To evaluate for the presence of Cryptosporidium oocysts in the public water supply earlier in the outbreak, we needed to identify a large quantity of archived water. We obtained large blocks of ice for sculpture made by one southern Milwaukee ice manufacturer. Because of visible impurities the ice blocks frozen on specific days could not be used as intended, but fortunately they had been saved. We sampled melted water from these ice blocks made with water coming from the southern treatment plant. These blocks were available for two different days of manufacture around the time of the outbreak (March 25 and April 9; Addiss et al., 1995; Mac Kenzie et al., 1994b). To gauge Cryptosporidium oocyst levels in the water, we melted the blocks from each production day and separated each of the respective samples into aliquots, which were filtered to recover oocysts using a peristaltic pump and two different kinds of filters: a 0.45-micron (absolute pore size) membrane filter (one aliquot for each production day), and a 1.0-micron (nominal pore size) spun polypropylene cartridge (the other aliquot for each production day). At the time, polypropylene cartridges were the standard filtration technique to determine the number of oocysts per liter in raw or finished water; membrane filters were “cutting edge,” but these proved to be a much more sensitive means of detecting oocysts. Using the membrane filter, we detected 13.2 oocysts per 100 liters of melted ice from March 25 (before the peak in turbidity), and 6.7 per 100 liters on April 9 (after the boil-water advisory was invoked; Mac Kenzie et al., 1994b). While quite elevated, these likely underestimate concentrations originally in the water because freezing disrupts Cryptosporidium oocysts. The median infectious dose of C. parvum among healthy adult volunteers with no serologic evidence of past infection is 132 oocysts (Du Pont et al., 1995). More recently, the 50 percent infectious dose (ID50) of C. hominis among healthy adult volunteers with no serologic evidence of past infection was estimated to be 10 oocysts using a clinical definition of infection and 83 oocysts using a microbiologic definition (Chappell et al., 2006).

A Confluence of Events and Contributing Factors Leading to This Outbreak and the Investigation of the Milwaukee Water Treatment Plants

We collected considerable data regarding the operation of the two MWW plants and operating conditions during March and April 1993. Figure 2-12 depicts the water treatment process used by the MWW in 1993 in both treatment plants.

FIGURE 2-12

Depiction of the water treatment process used in the northern and southern Milwaukee Water Works water treatment plants in early 1993.

Raw water was introduced by gravity and rapidly mixed with chlorine for disinfection and a coagulant for mechanical flocculation. Following sedimentation, the water was rapidly filtered through sand-filled filtration beds (16 in the north plant and 8 in the south plant) and then stored in a large clear well prior to entry into water distribution pipes (Addiss et al., 1995; Mac Kenzie et al., 1994b). The capacity for producing treated water in each plant was substantial; if one plant was shut down, the full catchment area would still be fully served by the other plant remaining in operation.

In September 1992, both plants changed the type of coagulant used, from the venerable alum to polyaluminum chloride. This was done in response to concern that lead and copper might leach from the aging water distribution infrastructure if the pH was too low, which was more likely to occur if the alum coagulant was used (Addiss et al., 1995).

From January 1983 to January 1993, the turbidity of treated water at the southern plant did not exceed 0.4 nephelometric turbidity unit (NTU). From February to April 1993, the turbidity of treated water at the southern plant did not exceed 0.25 NTU until March 18, when it increased to 0.35 NTU. From March 23 to April 1, the maximal daily turbidity of treated water was consistently 0.45 NTU or higher, with peaks of 1.7 NTU on March 28 and 30, despite an adjustment of the dose of polyaluminum chloride (Figure 2-13; Mac Kenzie et al., 1994b). Although marked improvement in the turbidity of treated water had been achieved by April 1 with the use of polyaluminum chloride, on April 2 the southern plant resumed use of alum instead of polyaluminum chloride as a coagulant. On April 5, the turbidity of treated water increased to 1.5 NTU. During February through April 1993, the northern plant treated water turbidity did not exceed 0.45 NTU (Mac Kenzie et al., 1994b). There was no correlation between the turbidity of treated water and the turbidity or temperature of untreated water. From February through April 1993, samples of treated water from both plants were negative for coliforms and were within the limits established by the Wisconsin Department of Natural Resources for water quality (Mac Kenzie et al., 1994b).

FIGURE 2-13

Maximum daily raw and treated water turbidity at the southern Milwaukee Water Works treatment plant, March-April 1993. SOURCE: Wisconsin Division of Public Health (unpublished).

A federal Environmental Protection Agency (EPA) water engineer inspected both Milwaukee water treatment plants and found them to meet existing state and federal water quality standards at the time of the outbreak. However, at the southern plant the water quality data showed a marked increase in turbidity, which reflected poor filtration. The turbidity was measured every eight hours, the minimum frequency required by authorities for routine monitoring. Prior to the outbreak, water turbidity was not generally viewed as a potential indicator of protozoan contamination.

The EPA inspector also found that, to decrease the costs of chemicals used in water treatment, the Milwaukee plants recycled water used to backflush and clean their sand filters. This backflushed water (backwash) containing whatever was caught by the sand filter was added to source water coming into the plant rather than being discharged into a sewer. Over time, such recycling of backwash effectively increases the concentration of any contaminant in the water being treated by the plant and increases the risk that the sand filters may not effectively remove the contaminant (Mac Kenzie et al., 1994b).

Weather conditions prior to the outbreak were also very unusual (Addiss et al., 1995; Mac Kenzie et al., 1994b). An extremely high winter snowpack had melted rapidly while the frostline remained high, resulting in high runoff containing greater-than-usual levels of organic material. There was also extraordinarily heavy rainfall during March and April, which exceeded the previous record for the period between March 21 and April 20 (set in 1929) by 30 percent.

At the time of the outbreak, during periods of heavy rain, Milwaukee’s storm sewers frequently overflowed. During these periods, sewage was chemically disinfected but otherwise bypassed full sewage treatment (Figure 2-14). Thus, during periods of high flow, the storm sewer and sanitary sewer water that bypassed treatment then emptied into an area within a breakfront on Lake Michigan, just north of the intake for the south water plant as may be seen in Figure 2-15 and further depicted in Figure 2-16.

FIGURE 2-14

Milwaukee skyline demonstrating confluence of rivers merging just west of the Milwaukee harbor. The Milwaukee Metropolitan Sewage District plant is located on the land just south of the convergence …

FIGURE 2-15

Milwaukee River emptying into the Lake Michigan harbor following a period of high flow and attendant creation of a plume. Note the breakfront and the southerly movement of the plume. SOURCE: Image …

FIGURE 2-16

Location of the three rivers that flow through Milwaukee County, Wisconsin, the breakfront protecting the city of Milwaukee harbor and the northern and southern Milwaukee Water Works water treatment …

At the same time, high and frequent northeasterly winds (an unusual wind direction in Milwaukee) probably accentuated the southerly flow of water out of the breakfront and toward the intake for the southern water plant (Figure 2-16). The winds also forced the water within the breakfront closer to the lakeshore, accentuating plumes of storm water and treated sewage that flowed through gaps in the breakfront toward the nearby south plant intake grid (Addiss et al., 1995).

At the southern water plant, personnel lacked experience with dosing the new coagulant in response to spikes in finished water turbidity. By the time the decision was made, on April 2, to resume the use of alum as the coagulant, treated water was already significantly contaminated with Cryptosporidium oocysts.

We also became aware of an additional factor that was of potential importance. In early 1993, a university in central Milwaukee was constructing new soccer fields. The drainage from these fields was directed into a small storm sewer that had to be connected to a larger main sewer. When construction workers cut into the main sewer to make this connection, they discovered a large impaction of bovine entrails and other waste from a large meatpacking plant located nearby. Ensuing investigation and inspection by city officials revealed a cross-connection of a sewer from the abattoir kill floor with the storm sewer. This cross-connection had existed for years, and these wastes had accumulated over a prolonged time. Following correction of the cross-connection, the impacted wastes were removed and hauled away in early March. Potentially, some of these disrupted wastes could have been discharged through the storm sewer directly into the Menomonee River or could have directly reached the sewage treatment facility following correction of the cross-connection. While it is not clear whether the existence and correction of the cross-connection and clean-up of the sewer influenced this outbreak, it was an issue that was addressed during the investigation.

The Ultimate Source of These Cryptosporidium Oocysts: Animals or Humans

As previously noted, as few as 10 Cryptosporidium hominis oocysts constituted an ID50 in adult volunteers (Chappell et al., 2006). An infected person persistently excretes billions of oocysts over an extended period. The mounting numbers of people ill with watery diarrhea, each of whom was likely excreting billions of oocysts into sanitary sewers during the course of illness, placed rapidly increasing demand on the MWW water treatment system and perpetuated an explosive cycle of Cryptosporidium-related oocyst ingestion, illness, and oocyst amplification. Additionally, oocysts can remain infective in moist environments for two to six months (Fayer, 2004). The opportunity for infection in this outbreak was, therefore, both inordinately high and sustained.

From the random digit dialing survey, we determined that the highest attack rates of watery diarrhea occurred in people aged 30 to 39 years (Mac Kenzie et al., 1994b). These tended to be working adults, many of whom were commuting from lower risk to higher risk places. Using age-related results of the random digit dialing survey we estimated an attack rate of 18 to 20 percent among children under the age of 10 years, and less than 15 percent among independent-living individuals over 70 years of age (Mac Kenzie et al., 1994b).

Subsequently, the frequency of asymptomatic infection among exposed Milwaukee residents was demonstrated to be very high. Although there was no reliable serologic test for Cryptosporidium at the time of the outbreak, stool and serum samples were collected from volunteers during this period. Sera from children who had blood tests for lead levels around the time of the outbreak were preserved; later, when a serologic test for Cryptosporidium became available, these sera were tested (McDonald et al., 2001). The serologic study in children revealed that the prevalence of anti-cryptosporidium antibodies among children from southern Milwaukee increased from 7 percent prior to the outbreak to approximately 80 percent after the outbreak, indicating that most children were infected and many infections were asymptomatic. These data also supported prior studies implicating the southern plant. Interestingly, the prevalence of anti-cryptosporidium antibody in Milwaukee children tested in 1998 was 7 percent, indicating that transmission had returned to baseline. The results demonstrated that the Milwaukee outbreak affected considerably more people than we had previously estimated (McDonald et al., 2001).

Efforts were made to obtain large-volume specimens of stool from volunteers with acute onset of diarrheal illness during the outbreak and to store the specimens in potassium dichromate, with the aim of maintaining viable oocysts for isolation and subsequent analysis. Only five specimens were obtained, including three from patients with AIDS (one of which was obtained in 1996 from a patient with chronic infection who initially was infected during the 1993 outbreak) (Peng et al., 1997). CDC investigators purified oocysts from isolates obtained from stools of four of the volunteers. Approximately one million oocysts from each specimen were orally administered to two-day-old calves or four- to six-day-old BALB/c or severe combined immunodeficient (SCID) mice. Further, the oocysts were ruptured, parasitic DNA was harvested, specific fragments were amplified, and the DNA was sequenced and analyzed. None of the isolates established infections in calves or mice, suggesting these were not bovine strains. Isolates in the overall study could be divided into two genotypes of what was, at that time, classified as Cryptosporidium parvum, on the basis of genetic polymorphism at one locus; the four Wisconsin isolates were similar to isolates observed only in humans that were noninfective in cows and mice (genotype 1). The other genotype (genotype 2) was infective in calves or mice (Peng et al., 1997). Thus, as noted by the authors of the study, the genotypic and experimental infection data from the four isolates examined suggest a human rather than bovine source. However, the results come from the analysis of only four samples from a massive outbreak, and the degree to which these samples are representative of the entire outbreak remains uncertain.

In later studies, C. parvum genotype 1 became known as C. hominis (Morgan-Ryan et al., 2005). Infection with a strain that was human adapted rather than bovine adapted is consistent with the massive numbers of human illnesses and asymptomatic human infections noted in this outbreak.

Peng et al. noted that possible sources of Lake Michigan’s contamination with Cryptosporidium oocysts included cattle along two rivers that flow into the Milwaukee Harbor, slaughterhouses, and human feces (Addiss et al., 1995; Mac Kenzie et al., 1994b; Peng et al., 1997). At that time cattle had been the most commonly implicated source of water contamination in Cryptosporidium outbreaks outside the United States, but not conclusively within the United States (Peng et al., 1997). Measures for preventing water contamination have in some cases included the removal of cattle from watershed areas in or around municipalities. If, however, sewer overflows and inadequate sewage treatment are the primary source of water contamination in urban settings where anthroponotic cycles were maintained, focusing only on cattle could fail to eliminate a very important source of infection (Peng et al., 1997). In the Milwaukee outbreak, the latter point is very important because of the combined sewer overflows (prolonged high flow interval in March and April 1993) and attendant inadequate sewage treatment and the anthroponotic nature of the outbreak.

Lessons Learned

Among the many lessons learned from the 1993 Milwaukee Cryptosporidium outbreak, the following lessons and needs stand out:

- Consistent application of stringent water quality standards. At the time of the outbreak, drinking water was regulated either by the EPA or by individual states, as was the case in Wisconsin, and the MWW water treatment and quality testing results were in compliance with all state and federal standards. Existing state and federal standards for treated water were insufficient to prevent this outbreak (Mac Kenzie et al., 1994b). Moreover, it is important to use measures of turbidity in treated water as an indicator of potential contamination rather than viewing turbidity as an aesthetic measure of clarity. Consistent application of stringent water quality standards was needed. More stringent federal water quality standards, which had been under development for several years, were implemented shortly after this massive waterborne outbreak (Addiss et al., 1995). The vastly improved attention to monitoring and to the quality of water filtration is a powerful impact of this investigation.

- Application of technical advances to monitor water safety and minimize the amount of inadequately filtered water delivered to the public. The post-filtration turbidity (and particle counts, if possible) of treated water should be monitored continuously for each filter to detect changes in filtration status. Alarm systems for each of the filters and particle counting devices are available to detect spikes in particles in the size range inclusive of Cryptosporidium oocysts and facilitate rapid filter shutdown and diversion of potentially contaminated water when thresholds are reached (Mac Kenzie et al., 1994b).

- Testing of source and finished water for Cryptosporidium. This was needed to detect risk for an outbreak and to determine when the water was safe to drink afterward. At the time of the outbreak, the sampling process for such testing was difficult and lengthy, and it was not standardized. Improved means of sampling and testing source and finished water for Cryptosporidium were needed.

- Environmental studies. A coordinated plan was needed to investigate the environment following a waterborne outbreak of Cryptosporidium infection, but not many such events had occurred. Thus, we had to overcome considerable challenges, particularly regarding designing, funding, and mobilizing appropriate studies relevant to human health. There needed to be equipment and trained human capacity for rapid deployment of specimen collection followed by prompt testing.

- Surveillance. Cryptosporidium infection was not a reportable public health condition at the time of the outbreak. Watery diarrhea proved to be a good case definition for Cryptosporidium infection in an outbreak setting; a more refined clinical case definition was necessary to detect sporadic cases. The random-digit dialing surveys were very valuable in assessing the scope and progress of this large community outbreak, and nursing home surveillance, as described, was very effective (Mac Kenzie et al., 1994b; Proctor et al., 1998). It would have been useful to have a surveillance system in place to analyze consumer complaints to the water authority before the outbreak, because the spike in complaints to the MWW was very striking; such analysis might have focused attention on the unusual turbidity of treated water that preceded the outbreak (Proctor et al., 1998).

- Testing of human stool and serum. Because of the time and expense involved, generally only patients with HIV infection, particularly those with AIDS, were routinely tested for Cryptosporidium at the time the outbreak occurred. In addition to delaying determination of the cause of the outbreak, the infrequent use of these tests likely contributed to delay in outbreak recognition. Improved assays were clearly needed. The striking data from the serologic testing of children (McDonald et al., 2001) demonstrated the value of serologic assays to assess background occurrence and the magnitude and impacts of outbreaks of Cryptosporidium infection.

- Routine assays for Cryptosporidium. Physicians, other clinicians, and public health officials clearly needed to broaden and sustain the index of suspicion for Cryptosporidium infection (Mac Kenzie et al., 1994b). This was challenging because of added costs of testing and the limited assays available at that time.

- Communication. To our advantage, we had good interagency communication and worked closely with communities of individuals at greatest risk. For example, the AIDS service organization in Milwaukee had access to over 700 case patients, and we could monitor morbidity and mortality in that population (Frisby et al., 1997). As a result of the outbreak, we developed targeted public health messages and shared them with other health departments. However, due to insufficient understanding of the pathogen and its public health effects, we lacked guidelines for governmental response to findings of oocysts, increased turbidity of finished water, and elevated particle counts in finished water. During our investigation, we worked to establish interagency coordination to remedy this situation.

- The media. Electronic and print media were essential to communicating risk and delivering other important public health messages during the outbreak, and in particular facilitated public inquiry by setting up phone banks. The Milwaukee Journal and the Milwaukee Sentinel jointly produced an issue in Spanish to inform a large segment of non-English speakers about the outbreak, and they maintained a help line in Spanish as well. The media published treated water turbidity data on a regular basis, which was especially helpful to individuals with HIV infection and AIDS. Daily news conferences and televised updates continued until the boil water advisory was lifted on April 14, and related articles appeared daily in the news for weeks.

- Other lessons. The many outbreak-related studies conducted during the time of the outbreak yielded other lessons on the clinical spectrum of cryptosporidiosis, epidemiologic features of cryptosporidiosis, effectiveness of control measures in specific subpopulations, effectiveness of preventive measures, and the economic impact of such a massive outbreak. Recurrence of diarrhea after a period of apparent recovery was documented frequently (in 39 percent of persons with laboratory-confirmed Cryptosporidium infections; Mac Kenzie et al., 1995b), a finding that has implications for patient counseling. A study among HIV-positive persons found that the severity of illness, but not the attack rate, was significantly greater in persons with HIV infection (Frisby et al., 1997). Among young children attending daycare centers, asymptomatic or minimally symptomatic Cryptosporidium infection was frequent (Cordell et al., 1997), a fact that may have contributed to several outbreaks associated with recreational water in other parts of the state several months after the Milwaukee outbreak (CDC, 1993; Mac Kenzie et al., 1995a). Surveillance studies revealed the potential usefulness of monitoring sales of over-the-counter antidiarrheal drugs as an early indicator of community-wide outbreaks (Proctor et al., 1998); the effectiveness of control measures and the absence of drinking water as a risk factor for the relatively low level of transmission during the post-outbreak period (Osewe et al., 1996); the importance of testing more than one stool specimen (Cicirello et al., 1997); the usefulness of death certificate review for estimating outbreak-related mortality (Hoxie et al., 1997); and the effectiveness of point-of-use water filters with pore diameters of less than 1 micron (Addiss et al., 1996). Additionally, a detailed cost analysis revealed the enormous economic impact of the outbreak (Corso et al., 2003).
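
The surveillance lessons above (consumer complaints to the water authority, sales of over-the-counter antidiarrheal drugs) share one idea: watch a daily count for a departure from its recent baseline. The following is a minimal sketch of that idea only. The daily counts are hypothetical, and the 7-day trailing window and the three-standard-deviation rule are assumptions chosen for illustration; they are not taken from the cited investigations.

```python
from statistics import mean, stdev

def flag_spikes(counts, window=7, z=3.0):
    """Return indices of days whose count exceeds a trailing baseline.

    The baseline is the mean of the previous `window` days; a day is flagged
    when it exceeds that mean by more than `z` standard deviations.
    """
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        # Floor the spread at 1.0 so a perfectly flat baseline does not
        # turn every one-unit change into an alarm (an assumed safeguard).
        sigma = max(stdev(baseline), 1.0)
        if counts[i] > mu + z * sigma:
            flagged.append(i)
    return flagged

# Hypothetical daily complaint counts, for illustration only.
daily_counts = [4, 5, 3, 6, 4, 5, 4, 5, 21, 30]
spike_days = flag_spikes(daily_counts)
print(spike_days)
```

A trailing baseline is used here so that each day is compared only with data that would have been available at the time, which is the situation a prospective surveillance system faces.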

Conclusions and Outcomes

The 1993 Milwaukee cryptosporidiosis outbreak was the largest documented waterborne disease outbreak in the United States. Cryptosporidium oocysts in untreated water from Lake Michigan that entered the plant were inadequately removed by the coagulation and filtration process at the Milwaukee southern water treatment plant. Water quality standards were inadequate to prevent this outbreak. There was a lack of laboratory testing for Cryptosporidium, which delayed recognition of the microbial etiology of the outbreak. While the environmental source of the oocysts in this outbreak is not specifically known, limited data from genotyping of Cryptosporidium DNA from a small number of human stool specimens obtained during the outbreak support the hypothesis that the environmental source of the oocysts was human. Nonetheless, there was a confluence of important factors that contributed to the occurrence of this massive outbreak (Mac Kenzie et al., 1994b). How oocysts ultimately made their way from sewers into water feeding the intake of the southern water treatment plant (e.g., by sewage overflows related to heavy rains, cross-connections, or inadequate treatment at the sewage treatment plant located at the mouth of the Milwaukee River and facilitated by unusual wind conditions) is unknown.

Based on these conclusions and the opinions of EPA staff and other consultants, the MWW instituted continuous turbidity monitoring in all of its filters. They also put alarms on the filters to enable automatic shutdown if turbidity reached a threshold level, and they set and achieved the goal of maintaining turbidity at a very low level. The MWW modified and improved water treatment procedures and adopted very stringent water quality standards. The filter beds in both treatment plants were substantially enhanced, and new filter media were installed. The intake grid for raw water entering the southern plant was moved considerably further eastward. These efforts resulted in continuous production by the MWW of high-quality treated water with mean turbidities of 0.01 NTU (Mac Kenzie et al., 1994a). Furthermore, recognizing that Cryptosporidium oocysts are highly resistant to chlorine, the City of Milwaukee constructed ozonization plants at each treatment facility. Ozone disrupts the oocyst cell wall prior to disinfection.
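
The per-filter alarm described above reduces to a threshold comparison on each filter's latest turbidity reading. The sketch below illustrates that logic under stated assumptions: the 0.3 NTU threshold, the filter names, and the readings are all hypothetical, because the text does not report the threshold the MWW adopted.

```python
def filters_to_shut_down(readings_ntu, threshold_ntu=0.3):
    """Return the sorted IDs of filters whose latest turbidity reading
    meets or exceeds the shutdown threshold (threshold is an assumption)."""
    return sorted(
        filter_id
        for filter_id, ntu in readings_ntu.items()
        if ntu >= threshold_ntu
    )

# Hypothetical latest readings in NTU, for illustration only.
latest = {"filter_1": 0.02, "filter_2": 0.45, "filter_3": 0.01}
shutdown = filters_to_shut_down(latest)
print(shutdown)
```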

When we studied turbidity events among Wisconsin surface water treatment plants over a 10-year period, we discovered other sites with similar challenges. For example, during the months of February through April, turbidity events occur frequently on Lake Michigan; these events affect all treatment plants that use water from this lake (Wisconsin Division of Public Health, unpublished data).

We advocated increasing Cryptosporidium testing of stools from persons with watery diarrhea and made Cryptosporidium infection a reportable condition (Hayes et al., 1989). Annually, the DPH receives about 450 reports of Cryptosporidium infection: some are recreational, some are connected with agriculture, but rarely do they originate in Milwaukee (Wisconsin Division of Public Health, unpublished data). We also advocated including Cryptosporidium testing in federal rules that stipulated the collection of information on both raw water sources and finished water (Juranek et al., 1995).

The massive Milwaukee waterborne Cryptosporidium outbreak, and the resulting modification of the city’s water treatment facilities, have received attention from water authorities worldwide. The attendant events and actions have been very instructive.

PREVENTION IS PAINFULLY EASY IN HINDSIGHT: FATAL E. coli O157:H7 AND Campylobacter OUTBREAK IN WALKERTON, CANADA, 2000

Steve E. Hrudey, Ph.D., University of Alberta; Elizabeth J. Hrudey, University of Alberta

Summary

In May 2000, a comfortable rural community of about 4,800 people in Canada’s largest province (Ontario) experienced an outbreak of waterborne disease that killed seven people and caused serious illness in many others. The contamination was ultimately traced to a source that had been identified 22 years earlier as a threat to the drinking water system, but no remedial action was taken to manage the public health risk. The operators of the system were oblivious to this danger, and the regulators responsible for safe drinking water largely overlooked the problems that existed. Even as the outbreak unfolded, the regulatory response was slow and unfocused, suggesting that a serious loss of capacity to regulate drinking water safety had occurred in Ontario.

Introduction

Walkerton, located about 175 km northwest of Toronto, Ontario, Canada, experienced serious drinking water contamination in May 2000. The facts of this account are drawn from the Walkerton Inquiry (the Inquiry), a $9 million public inquiry into this disaster called by the Ontario Attorney General (O’Connor, 2002a). This disaster has resulted in a complete overhaul of the drinking water regulatory system in Ontario.

What Happened in Walkerton

Walkerton was served by three wells in May of 2000, identified as Wells 5, 6, and 7. Well 5 was located on the southwest edge of the town, bordering adjacent farmland. It was drilled in 1978 to a depth of 15 m with 2.5 m of overburden and protective casing pipe to 5 m depth (O’Connor, 2002a). The well was completed in fractured limestone with the water-producing zones ranging from 5.5 to 7.4 m depth and it provided a capacity of 1.8 ML/d that was able to deliver ~56 percent of the community water demand. Well 5 water was to be chlorinated with hypochlorite solution to achieve a chlorine residual of 0.5 mg/L for 15 minutes contact time.

Well 6 was located 3 km west of Walkerton in rural countryside and was drilled in 1982 to a depth of 72 m with 6.1 m of overburden and protective casing to 12.5 m depth (O’Connor, 2002a). An assessment after the outbreak determined that Well 6 operated from seven producing zones with approximately half the water coming from a depth of 19.2 m. This supply was judged to be hydraulically connected to surface water in an adjacent wetland and a nearby private pond. Well 6 was disinfected by a gas chlorinator and provided a nominal capacity of 1.5 ML/d that was able to deliver 42 to 52 percent of the community water demand (O’Connor, 2002a).

Well 7, located approximately 300 m northwest of Well 6, was drilled in 1987 to a depth of 76.2 m with 6.1 m of overburden and protective casing to 13.7 m depth (O’Connor, 2002a). An assessment following the outbreak determined that Well 7 operated from three producing zones at depths greater than 45 m with half the water produced from below 72 m. A hydraulic connection discovered between Well 6 and Well 7 reduced the security of an otherwise good-quality groundwater supply. Well 7 was also disinfected by a gas chlorinator and provided a nominal capacity of 4.4 ML/d that was able to deliver 125 to 140 percent of the community water demand (O’Connor, 2002a).
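
The capacities and demand shares quoted for the three wells can be cross-checked with simple arithmetic: dividing each capacity by the share of demand it could meet gives the community demand that the figure implies. The sketch below does this back-calculation. The resulting demand range is an inference from the numbers quoted above, not a value reported by the Inquiry, and the function name is hypothetical.

```python
# Capacity (ML/d) and the share of community demand (percent, low and high)
# each well could deliver, as quoted in the text above.
wells = {
    "Well 5": (1.8, (56, 56)),
    "Well 6": (1.5, (42, 52)),
    "Well 7": (4.4, (125, 140)),
}

def implied_demand(capacity_ml_d, share_percent):
    """Community demand (ML/d) implied by a capacity and the share it meets."""
    return capacity_ml_d / (share_percent / 100)

implied = {}
for name, (capacity, (share_low, share_high)) in wells.items():
    # A larger share for the same capacity implies a smaller total demand.
    implied[name] = (
        implied_demand(capacity, share_high),
        implied_demand(capacity, share_low),
    )
    low, high = implied[name]
    print(f"{name}: implied demand {low:.2f} to {high:.2f} ML/d")
```

Under these figures the three wells imply a broadly consistent community demand of roughly 2.9 to 3.6 ML/d, which is why Well 7 alone could exceed demand while Wells 5 and 6 each covered only about half of it.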

From May 8 to May 12, Walkerton experienced ~134 mm of rainfall, with 70 mm falling on May 12. This was unusually heavy, but not record, precipitation for this location. Such rainfall over a 5-day period was estimated by Environment Canada to happen approximately once in 60 years (on average) for this region in May (Auld et al., 2004). The rainfall of May 12, which was estimated by hydraulic modeling to have occurred mainly between 6 PM and midnight, produced flooding in the Walkerton area.

Stan Koebel, the general manager of the Walkerton Public Utilities Commission (PUC), was responsible for managing the overall operation of the drinking water supply and the electrical power utility. From May 5 to May 14, he was away from Walkerton, in part to attend an Ontario Water Works Association meeting. He had left instructions with his brother Frank, the foreman for the Walkerton PUC, to replace a nonfunctioning chlorinator on Well 7. From May 3 to May 9, Well 7 was providing the town with unchlorinated water in contravention of the applicable provincial water treatment requirements.

From May 9 to 15, the water supply was switched to Wells 5 and 6. Well 5 was the primary source during this period, with Well 6 cycling on and off, except for a period from 10:45 PM on May 12 until 2:15 PM on May 13 when Well 5 was shut down. Testimony at the Inquiry offered no direct explanation about this temporary shutdown of Well 5. No one admitted to turning Well 5 off and the supervisory control and data acquisition (SCADA) system was set to keep Well 5 pumping. Flooding was observed near Well 5 on the evening of May 12 because of the heavy rainfall that night, but why or how Well 5 was shut down for this period remains unknown.

On May 13 at 2:15 PM, Well 5 resumed pumping. That afternoon, according to the daily operating sheets, foreman Frank Koebel performed the routine daily checks on pumping flow rates and chlorine usage, and measured the chlorine residual on the water entering the distribution system. He recorded a daily chlorine residual measurement of 0.75 mg/L for Well 5 treated water on May 13 and again for May 14 and 15. Testimony at the Inquiry indicated that these chlorine residual measurements were never made and that all the operating sheet entries for chlorine residual were fictitious. The monitoring data were typically entered as either 0.5 mg/L or 0.75 mg/L for every day of the month.
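
Operating sheets that show only one or two values for every day of a month are a recognizable pattern, because genuine daily residual measurements normally vary. The sketch below shows one simple way such a pattern could be screened for in a log. It is an illustrative heuristic only, not a method used by the Inquiry; the cutoffs (`max_distinct`, `min_days`) and both example logs are hypothetical.

```python
def looks_implausibly_uniform(readings, max_distinct=2, min_days=20):
    """Flag a log in which many daily readings take only a few distinct values.

    Both cutoffs are assumptions chosen for illustration.
    """
    return len(readings) >= min_days and len(set(readings)) <= max_distinct

# Hypothetical 30-day logs of chlorine residual in mg/L.
uniform_log = [0.5, 0.75] * 15  # only two values across the whole month
varied_log = [round(0.40 + 0.01 * ((day * 7) % 23), 2) for day in range(30)]

print(looks_implausibly_uniform(uniform_log))
print(looks_implausibly_uniform(varied_log))
```

A flag from a screen like this would justify a closer review of the records and sampling practice; it would not by itself establish that entries were fabricated.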

On Monday, May 15, Stan Koebel returned and early in the morning turned on Well 7, presumably believing that his instruction to install the new chlorinator had been followed. When he learned a few hours later that it had not, he continued to allow Well 7 to pump into the Walkerton system, without chlorination, until Saturday, May 20. Well 5 was shut off at 1:15 PM on May 15, making the unchlorinated Well 7 supply the only source of water for Walkerton during the week of May 15. Because the Well 5 supply was ultimately determined to be the source of pathogen contamination of the Walkerton drinking water system, the addition of unchlorinated water into the system from Well 7 would have failed to reduce the pathogen load by any means other than dilution.

PUC employees routinely collected water samples for bacteriological testing on Mondays. Samples of raw and treated water were to be collected from Well 7 that day along with two samples from the distribution system. Although samples labeled Well 7 raw and Well 7 treated were submitted for bacteriological analyses, the Inquiry concluded that these samples were not taken at Well 7 and were more likely to be representative of Well 5. Stan Koebel testified that PUC employees sometimes collected their samples at the PUC shop, located nearby and immediately downstream from Well 5, rather than traveling to the more distant wells (~3 km away) or to the distribution system sample locations.

During this period, a new water main was being installed (the Highway 9 project). The contractor and consultant for this project asked Stan Koebel if they could submit their water samples from this project to the laboratory being used by the Walkerton PUC for bacteriological testing. Stan Koebel agreed and included three samples from two hydrants for the Highway 9 project. On May 1, the PUC began using a new laboratory for bacteriological testing, a lab the PUC had previously used only for chemical analyses.